⚠️ Reposted from: https://jiangxl.blog.csdn.net/article/details/120428703

Only lightly modified.

[toc]

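# 1. Environment preparation

# 1.1. Deployment methods for a highly available Kubernetes cluster

There are currently two main ways to deploy Kubernetes in production:

kubeadm: provides kubeadm init and kubeadm join for quickly bringing up a Kubernetes cluster. In a cluster installed with kubeadm, the Kubernetes components (other than kubelet) run as pods.

Binary packages: download the release binaries from GitHub and deploy each component by hand to assemble the cluster.

kubeadm lowers the cost of deployment but hides many details, which makes problems harder to troubleshoot. If you want more control, deploying from binary packages is recommended: the manual work is more tedious, but you learn how the components work along the way, which also helps later maintenance.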

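# 1.2. Kubernetes drops Docker as a container runtime

To integrate with container runtimes such as Docker, the Kubernetes community introduced the CRI (Container Runtime Interface) early on so that more runtimes could be supported. When Docker is the container runtime, kubelet first calls dockershim, the CRI adapter for Docker, which connects to the Docker daemon, and Docker finally starts the container.

Kubernetes deprecated the dockershim component of kubelet in v1.20 and removed it in v1.24. Once dockershim is gone, kubelet has no built-in CRI adapter through which it can reach Docker, which is why Kubernetes is said to drop Docker as a container runtime.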

# 1.3. Certificates required by the Kubernetes cluster

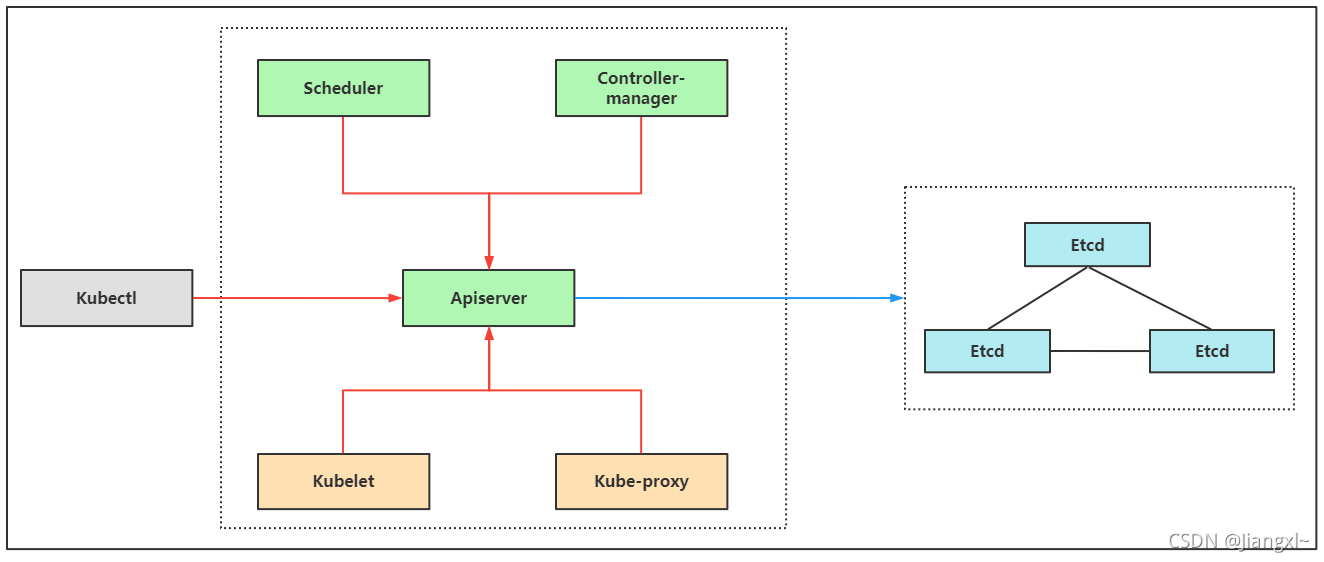

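All Kubernetes components communicate over HTTPS. Their certificates are normally issued from two root CAs: one for the Kubernetes apiserver and one for the etcd database.

By role, the certificates are divided into those for master (management) nodes and those for worker nodes.

- Master nodes: the client certificates that controller-manager and scheduler use to connect to the apiserver.

- Worker nodes: the client certificates that kubelet and kube-proxy use to connect to the apiserver. The TLS bootstrap mechanism is normally enabled, so on first start kubelet requests a certificate from the apiserver and the controller-manager component issues it automatically.

- In the figure, the red lines show components accessing the apiserver with client certificates issued by the cluster's own Kubernetes CA; the blue lines show the apiserver connecting to etcd with a client certificate issued by the etcd CA.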

# 1.4. Environment preparation

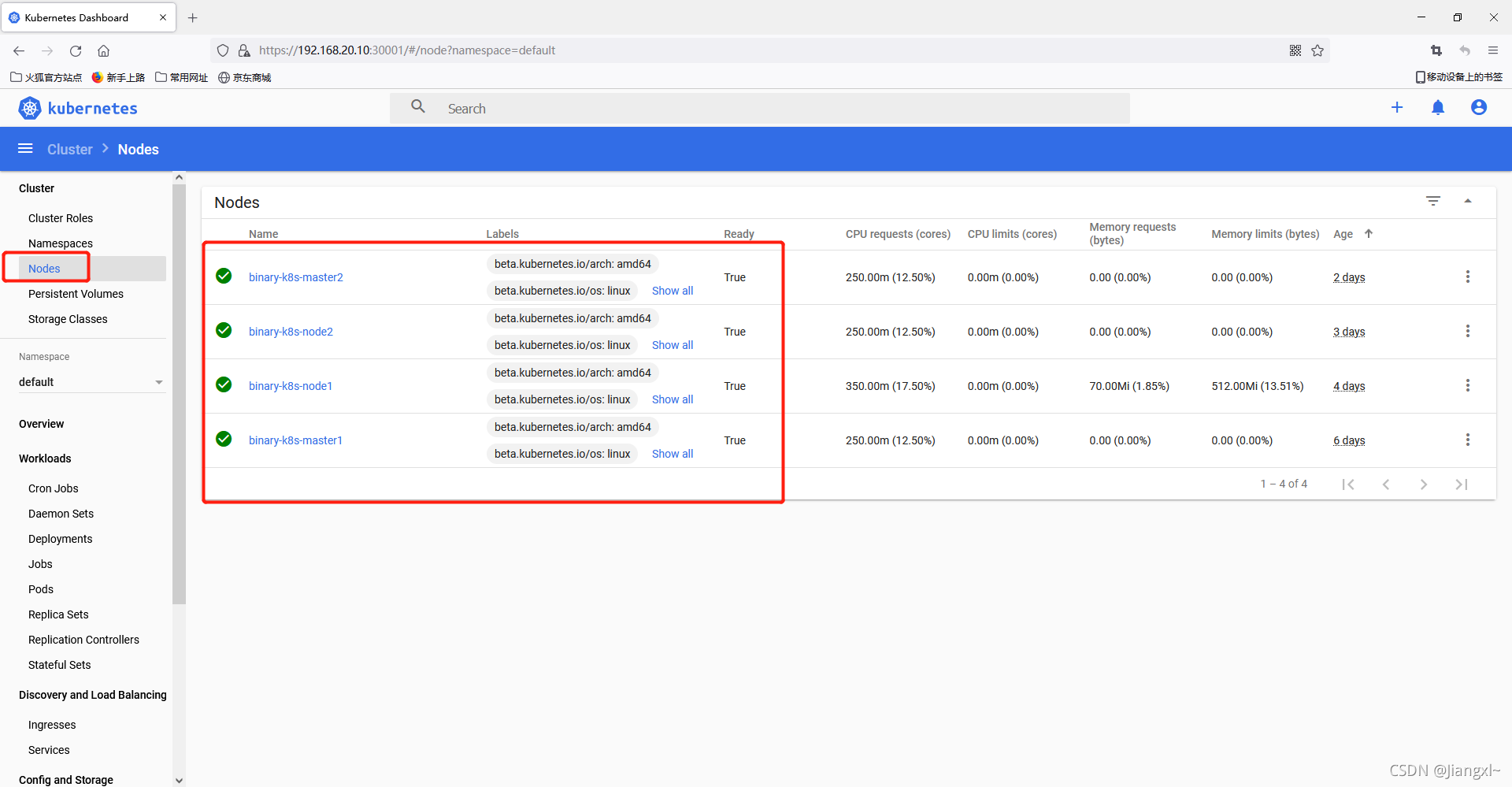

| Role | IP | Components |
|---|---|---|
| binary-k8s-master1 | 192.168.20.10 | kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, docker, etcd, nginx, keepalived |
| binary-k8s-master2 | 192.168.20.11 | kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, docker, nginx, keepalived, etcd (scale-out node) |
| binary-k8s-node1 | 192.168.20.12 | kubelet, kube-proxy, docker, etcd |
| binary-k8s-node2 | 192.168.20.13 | kubelet, kube-proxy, docker, etcd |

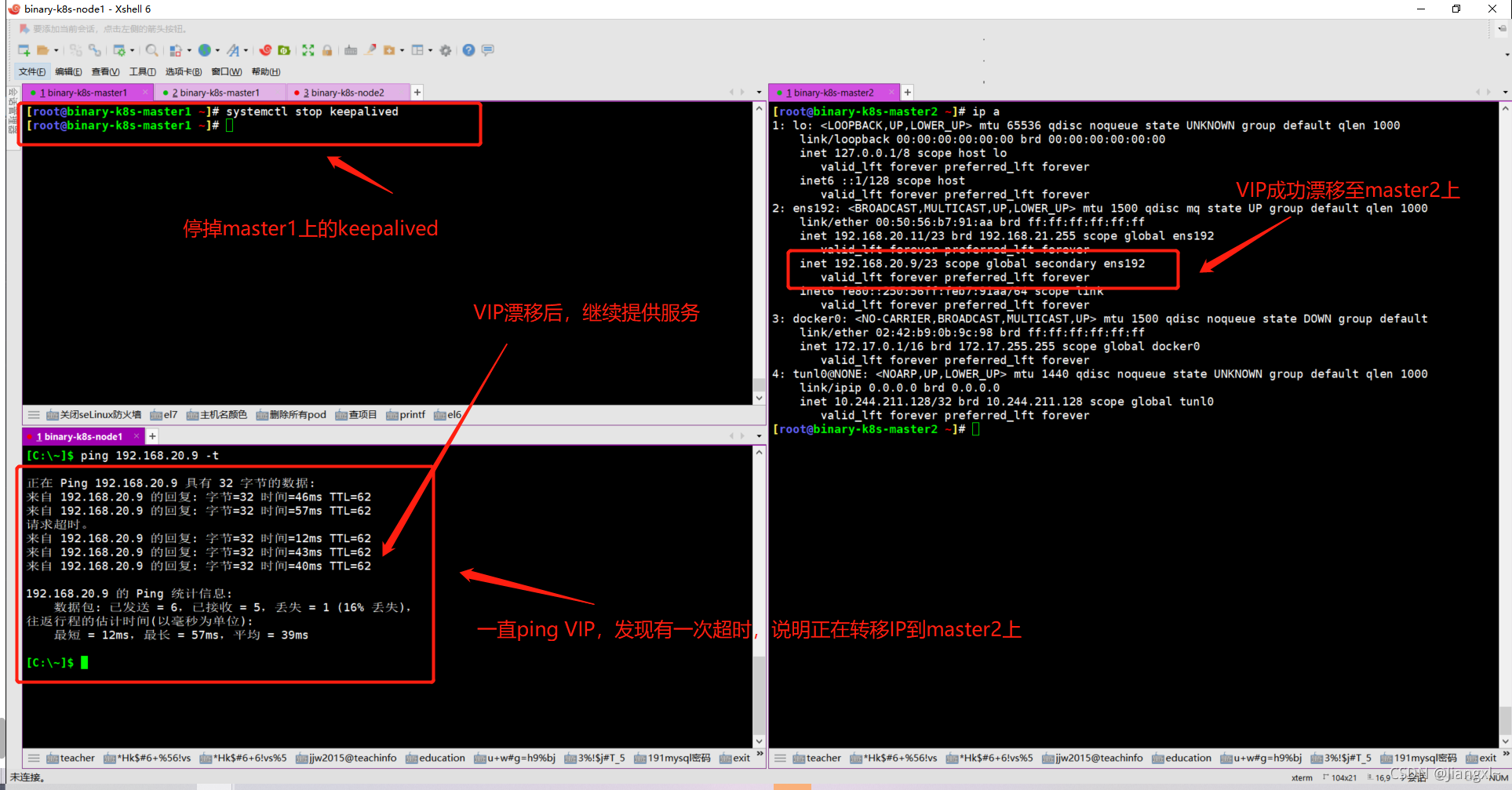

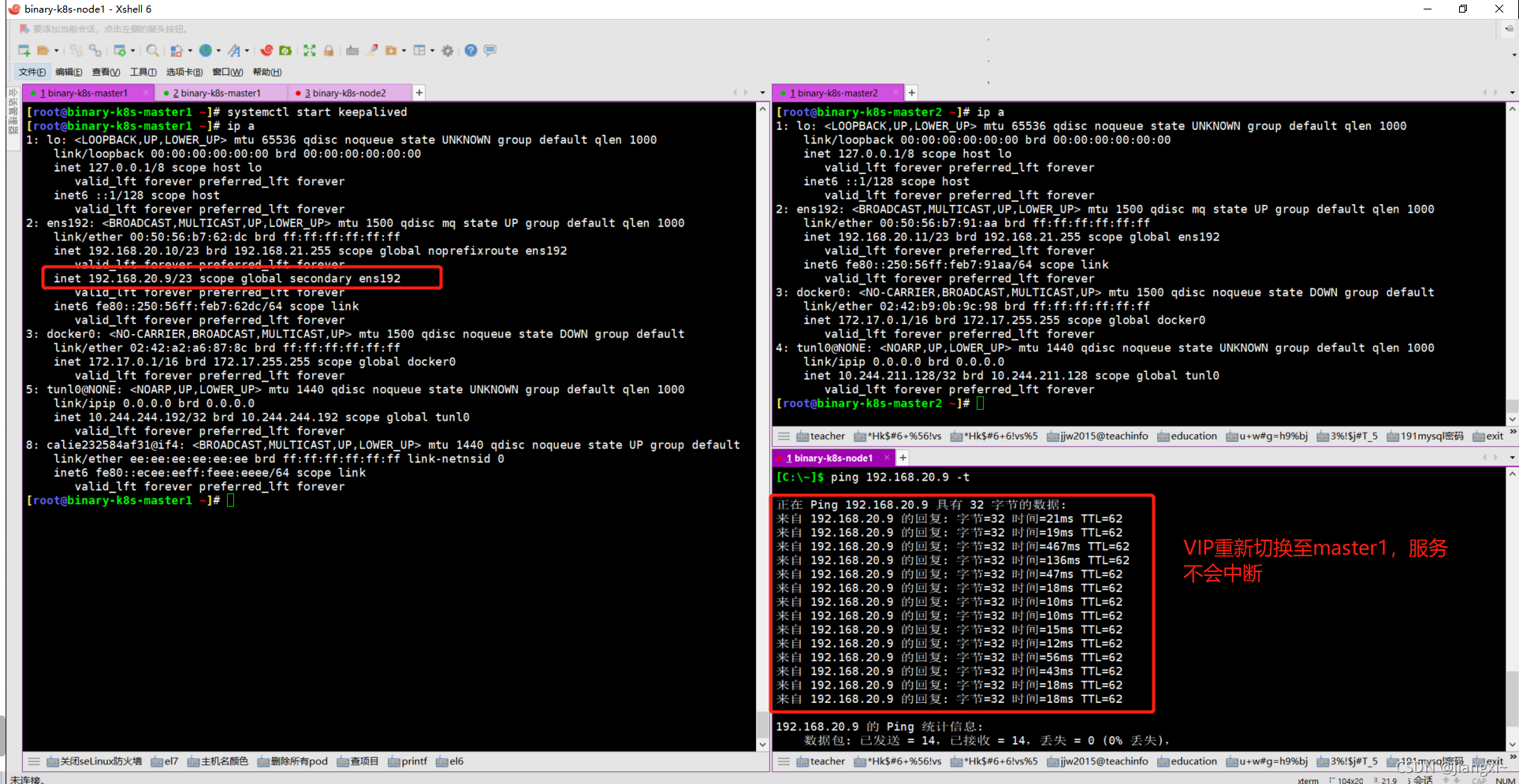

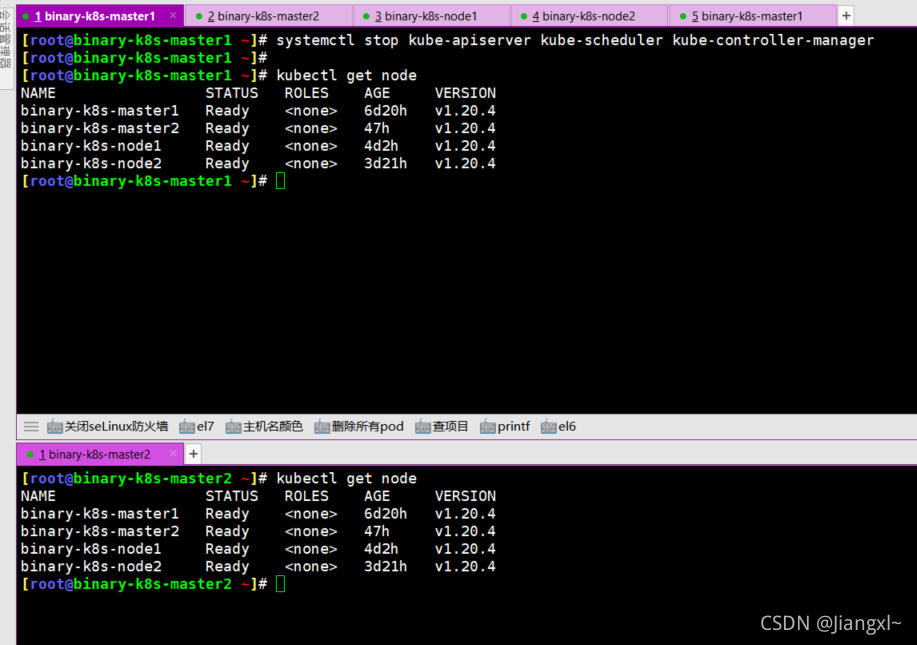

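Load balancer IP: 192.168.20.9 (the address that fronts kube-apiserver).

First deploy a Kubernetes cluster with a single master node, then add a second master node to make it highly available.

Server plan for the single-master Kubernetes cluster: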

| Role | IP | Components |
|---|---|---|
| binary-k8s-master1 | 192.168.20.10 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| binary-k8s-node1 | 192.168.20.12 | kubelet, kube-proxy, docker, etcd |
| binary-k8s-node2 | 192.168.20.13 | kubelet, kube-proxy, docker, etcd |

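# 1.5. Install the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient to use than openssl.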

[root@binary-k8s-master1 ~]\# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@binary-k8s-master1 ~]\# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@binary-k8s-master1 ~]\# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@binary-k8s-master1 ~]\# chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

[root@binary-k8s-master1 ~]\# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@binary-k8s-master1 ~]\# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@binary-k8s-master1 ~]\# mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo
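The seven commands above repeat the same download, chmod, and move steps for three tools, so they can be collapsed into a loop. The following is only a sketch: the function name `install_cfssl` and the `RUN` dry-run variable are made up for illustration, and it installs all three tools into /usr/local/bin, whereas the commands above put cfssl-certinfo into /usr/bin.

```shell
# Hypothetical helper: download and install the three cfssl R1.2 tools.
# Set RUN=echo to print the commands instead of executing them.
install_cfssl() (
  base=https://pkg.cfssl.org/R1.2
  for tool in cfssl cfssljson cfssl-certinfo; do
    file=${tool}_linux-amd64
    ${RUN:-} wget "$base/$file" &&
    ${RUN:-} chmod +x "$file" &&
    ${RUN:-} mv "$file" "/usr/local/bin/$tool" || exit 1
  done
)

# Dry run: only prints the nine commands, nothing is downloaded or moved.
RUN=echo install_cfssl
```

Calling `install_cfssl` without `RUN` set performs the real installation and needs root privileges and network access.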


# 2. Operating system initialization

1. Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

2. Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

3. Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

4. Configure hosts

cat >> /etc/hosts << EOF

192.168.20.10 binary-k8s-master1

192.168.20.12 binary-k8s-node1

192.168.20.13 binary-k8s-node2

EOF

5. Tune kernel parameters

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
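The sed command in step 3 comments out every line of /etc/fstab that mentions swap, so the swap partition stays off after a reboot. As a rough sketch (the function name `comment_swap_lines` is invented for illustration), the same substitution can be tried on sample input before touching the real file. This variant also skips lines that are already commented, so applying it twice does not stack `#` characters.

```shell
# Hypothetical helper: reads fstab-style text on stdin and prints it with
# every active swap line commented out. Lines that already start with '#'
# are left alone, so the filter can be applied repeatedly.
comment_swap_lines() {
  sed '/^[[:space:]]*#/!s/.*swap.*/#&/'
}

# Example input modelled on a typical fstab (illustrative values only).
printf '%s\n' \
  '/dev/mapper/centos-root /     xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap  swap defaults 0 0' |
  comment_swap_lines
```

Only the second sample line is prefixed with `#`; the root filesystem entry passes through unchanged.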


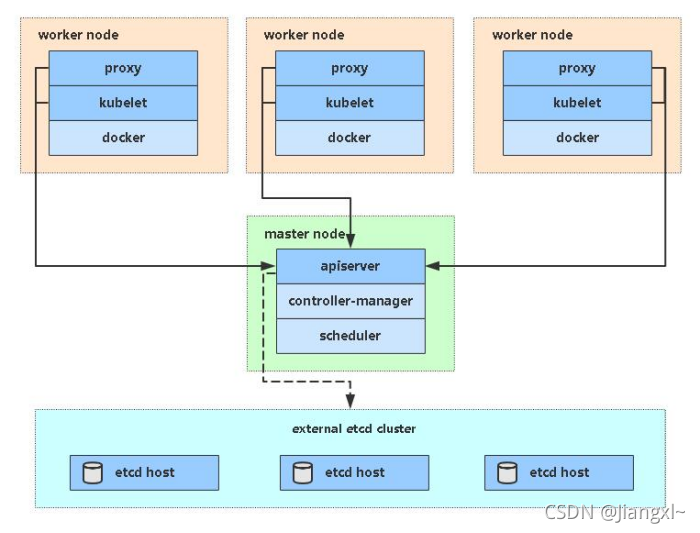

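# 3. Deploy the etcd cluster

etcd is a distributed key-value store that Kubernetes uses to hold its data. To avoid a single point of failure, etcd is deployed as a cluster: a 3-member cluster tolerates the failure of 1 member, and a 5-member cluster tolerates 2.

| Node name | IP |
|---|---|
| etcd-1 | 192.168.20.10 |
| etcd-2 | 192.168.20.12 |
| etcd-3 | 192.168.20.13 |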
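The fault-tolerance figures follow from the majority rule: an etcd cluster keeps working as long as more than half of its members are available. A small sketch of that arithmetic (the function names are made up for illustration):

```shell
# Quorum is a strict majority of the members: floor(n/2) + 1.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }

# Members that may fail while a quorum still remains: n - quorum.
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

The 4-member row shows why odd cluster sizes are the usual choice: a fourth member raises the quorum to 3 without tolerating any more failures than a 3-member cluster.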

# 3.1. Generate the etcd certificates with cfssl

1. Generate the self-signed CA certificate

[root@binary-k8s-master1 ~/TLS/etcd]\# vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

[root@binary-k8s-master1 ~/TLS/etcd]\# vim ca-csr.json

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

[root@binary-k8s-master1 ~/TLS/etcd]\# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2021/08/27 17:16:49 [INFO] generating a new CA key and certificate from CSR

2021/08/27 17:16:49 [INFO] generate received request

2021/08/27 17:16:49 [INFO] received CSR

2021/08/27 17:16:49 [INFO] generating key: rsa-2048

2021/08/27 17:16:49 [INFO] encoded CSR

2021/08/27 17:16:49 [INFO] signed certificate with serial number 595276170535764345591605360849177409156623041535


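2. Issue the etcd HTTPS certificate with the self-signed CA

The certificate request JSON has a hosts field that lists the IP addresses of the etcd cluster members. Extra IPs can be listed as reserved addresses to make a later scale-out of the etcd cluster easier; here 192.168.20.11 is reserved.

1. Create the certificate request file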

[root@binary-k8s-master1 ~/TLS/etcd]\# vim server-csr.json

{

"CN": "etcd",

"hosts": [

"192.168.20.10",

"192.168.20.11",

"192.168.20.12",

"192.168.20.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

2. Generate the certificate

[root@binary-k8s-master1 ~/TLS/etcd]\# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

2021/08/27 17:17:08 [INFO] generate received request

2021/08/27 17:17:08 [INFO] received CSR

2021/08/27 17:17:08 [INFO] generating key: rsa-2048

2021/08/27 17:17:08 [INFO] encoded CSR

2021/08/27 17:17:08 [INFO] signed certificate with serial number 390637014214409356442509482537912246480465374076

2021/08/27 17:17:08 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").


3. View the generated certificate files

[root@binary-k8s-master1 ~/TLS/etcd]\# ll

总用量 36

-rw-r--r--. 1 root root 288 8月 27 17:16 ca-config.json

-rw-r--r--. 1 root root 956 8月 27 17:16 ca.csr

-rw-r--r--. 1 root root 210 8月 27 17:16 ca-csr.json

-rw-------. 1 root root 1675 8月 27 17:16 ca-key.pem

-rw-r--r--. 1 root root 1265 8月 27 17:16 ca.pem

-rw-r--r--. 1 root root 1021 8月 27 17:17 server.csr

-rw-r--r--. 1 root root 311 8月 27 17:17 server-csr.json

-rw-------. 1 root root 1679 8月 27 17:17 server-key.pem

-rw-r--r--. 1 root root 1346 8月 27 17:17 server.pem


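# 3.2. Deploy the etcd cluster

1. Download the etcd binaries

Download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

The simplest way to deploy a cluster from binaries is to set up one machine first, then scp all the files to the other machines and adjust the configuration there.

Upload the downloaded archive to every etcd node.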

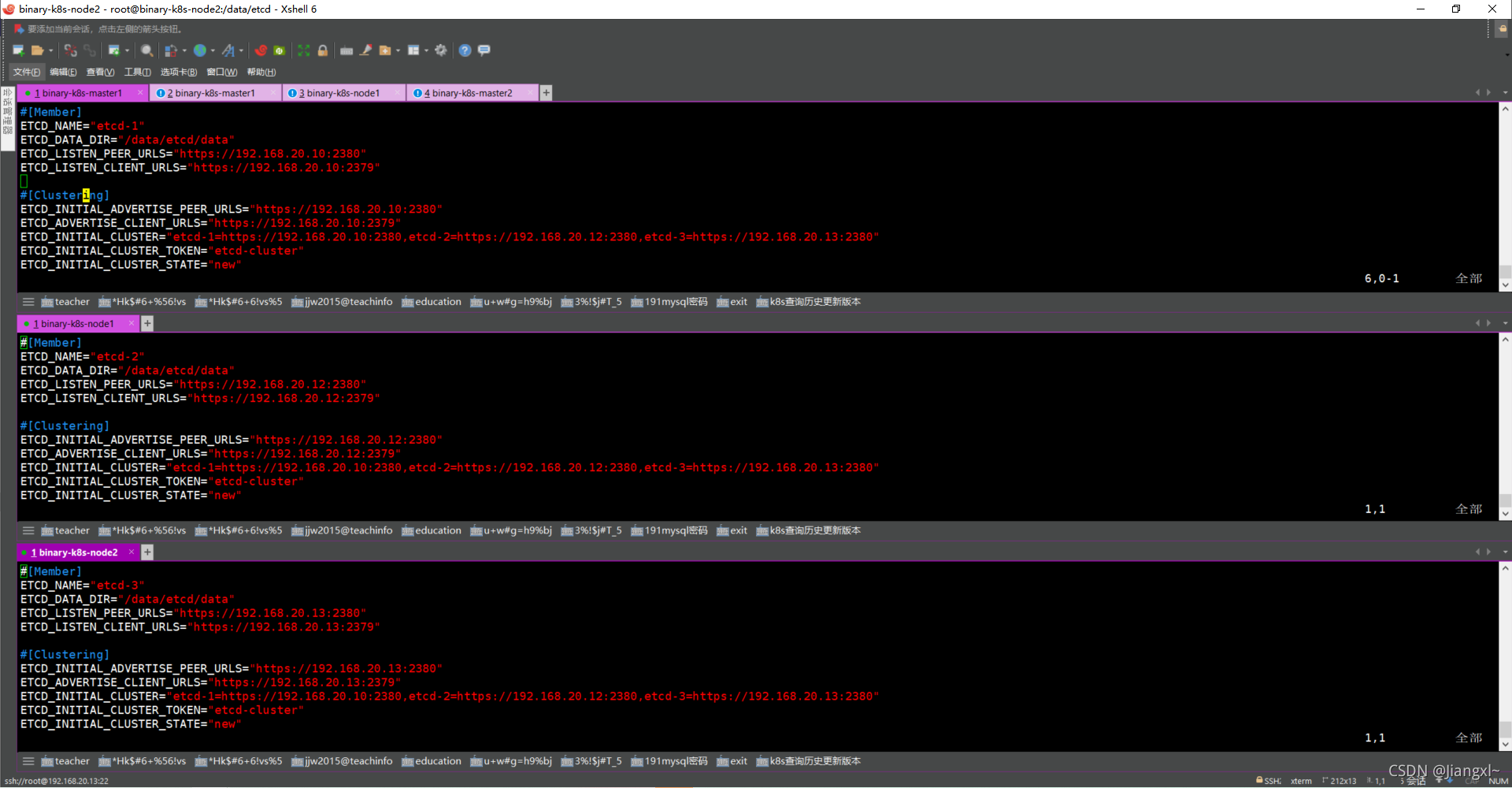

Explanation of the etcd configuration file

#[Member]

ETCD_NAME="etcd-1" #node name

ETCD_DATA_DIR="/data/etcd/data" #data directory

ETCD_LISTEN_PEER_URLS="https://192.168.20.10:2380" #listen address for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS="https://192.168.20.10:2379,http://127.0.0.1:2379" #listen addresses for client traffic; adding http://127.0.0.1:2379 allows querying the cluster from this node without specifying certificates

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.20.10:2380" #peer address advertised to the rest of the cluster

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.20.10:2379,http://127.0.0.1:2379" #client addresses advertised to clients; http://127.0.0.1:2379 is added here for the same reason as above

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.20.10:2380,etcd-2=https://192.168.20.12:2380,etcd-3=https://192.168.20.13:2380" #addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster" #unique identifier of the cluster

ETCD_INITIAL_CLUSTER_STATE="new" #state when joining: new for a new cluster, existing to join an existing cluster


2. Deploy the etcd-1 node

1. Create the program directories

[root@binary-k8s-master1 ~]\# mkdir /data/etcd/{bin,conf,ssl,data} -p

2. Unpack the binaries

[root@binary-k8s-master1 ~]\# tar xf etcd-v3.4.9-linux-amd64.tar.gz

3. Move the binaries into the program directory

[root@binary-k8s-master1 ~]\# mv etcd-v3.4.9-linux-amd64/etcd* /data/etcd/bin/

4. Edit the configuration file

[root@binary-k8s-master1 ~]\# vim /data/etcd/conf/etcd.conf

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/data/etcd/data"

ETCD_LISTEN_PEER_URLS="https://192.168.20.10:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.20.10:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.20.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.20.10:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.20.10:2380,etcd-2=https://192.168.20.12:2380,etcd-3=https://192.168.20.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

5. Write the systemd unit file

[root@binary-k8s-master1 ~]\# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/data/etcd/conf/etcd.conf

ExecStart=/data/etcd/bin/etcd \

--cert-file=/data/etcd/ssl/server.pem \

--key-file=/data/etcd/ssl/server-key.pem \

--peer-cert-file=/data/etcd/ssl/server.pem \

--peer-key-file=/data/etcd/ssl/server-key.pem \

--trusted-ca-file=/data/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/data/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

6. Copy the certificate files

[root@binary-k8s-master1 ~]\# cp TLS/etcd/*.pem /data/etcd/ssl/

7. Start the etcd-1 node

[root@binary-k8s-master1 ~]\# systemctl daemon-reload

[root@binary-k8s-master1 ~]\# systemctl start etcd

[root@binary-k8s-master1 ~]\# systemctl enable etcd

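Note: the first node stays in the starting state until a second node has been started, because a clustered etcd needs at least 2 of its 3 members running before it can operate.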


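3. Configure the etcd-2 and etcd-3 nodes

Once one node is deployed, its directory can be copied directly to the other nodes, which saves repeating the installation steps.

1. Push the etcd directory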

[root@binary-k8s-master1 ~]\# scp -rp /data/etcd root@192.168.20.12:/data

[root@binary-k8s-master1 ~]\# scp -rp /data/etcd root@192.168.20.13:/data

2. Push the systemd unit file

[root@binary-k8s-master1 ~]\# scp -rp /usr/lib/systemd/system/etcd.service root@192.168.20.12:/usr/lib/systemd/system/

[root@binary-k8s-master1 ~]\# scp -rp /usr/lib/systemd/system/etcd.service root@192.168.20.13:/usr/lib/systemd/system/

3. Edit the etcd-2 configuration file

[root@binary-k8s-node1 ~]\# vim /data/etcd/conf/etcd.conf

#[Member]

ETCD_NAME="etcd-2"

ETCD_DATA_DIR="/data/etcd/data"

ETCD_LISTEN_PEER_URLS="https://192.168.20.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.20.12:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.20.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.20.12:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.20.10:2380,etcd-2=https://192.168.20.12:2380,etcd-3=https://192.168.20.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

4. Edit the etcd-3 configuration file

[root@binary-k8s-node2 ~]\# vim /data/etcd/conf/etcd.conf

#[Member]

ETCD_NAME="etcd-3"

ETCD_DATA_DIR="/data/etcd/data"

ETCD_LISTEN_PEER_URLS="https://192.168.20.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.20.13:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.20.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.20.13:2379,http://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.20.10:2380,etcd-2=https://192.168.20.12:2380,etcd-3=https://192.168.20.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

5. Start etcd-2 and etcd-3

[root@binary-k8s-node1 ~]\# systemctl daemon-reload

[root@binary-k8s-node1 ~]\# systemctl start etcd

[root@binary-k8s-node1 ~]\# systemctl enable etcd

------------

[root@binary-k8s-node2 ~]\# systemctl daemon-reload

[root@binary-k8s-node2 ~]\# systemctl start etcd

[root@binary-k8s-node2 ~]\# systemctl enable etcd
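The three etcd.conf files above differ only in the member name and the member's own IP address. Instead of editing the copied file by hand on each node, the file could be rendered from those two values. This is a minimal sketch, assuming the same paths, ports, and member list as above; `render_etcd_conf` is a hypothetical helper, not part of etcd.

```shell
# Hypothetical helper: print an etcd.conf for one member.
#   $1 = member name (for example etcd-2), $2 = that member's IP address
render_etcd_conf() (
  cluster="etcd-1=https://192.168.20.10:2380,etcd-2=https://192.168.20.12:2380,etcd-3=https://192.168.20.13:2380"
  cat <<EOF
#[Member]
ETCD_NAME="$1"
ETCD_DATA_DIR="/data/etcd/data"
ETCD_LISTEN_PEER_URLS="https://$2:2380"
ETCD_LISTEN_CLIENT_URLS="https://$2:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$2:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$2:2379,http://127.0.0.1:2379"
ETCD_INITIAL_CLUSTER="$cluster"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
)

# Print the file for etcd-2; on that node the output would be redirected
# to /data/etcd/conf/etcd.conf.
render_etcd_conf etcd-2 192.168.20.12
```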


4. Check the cluster status

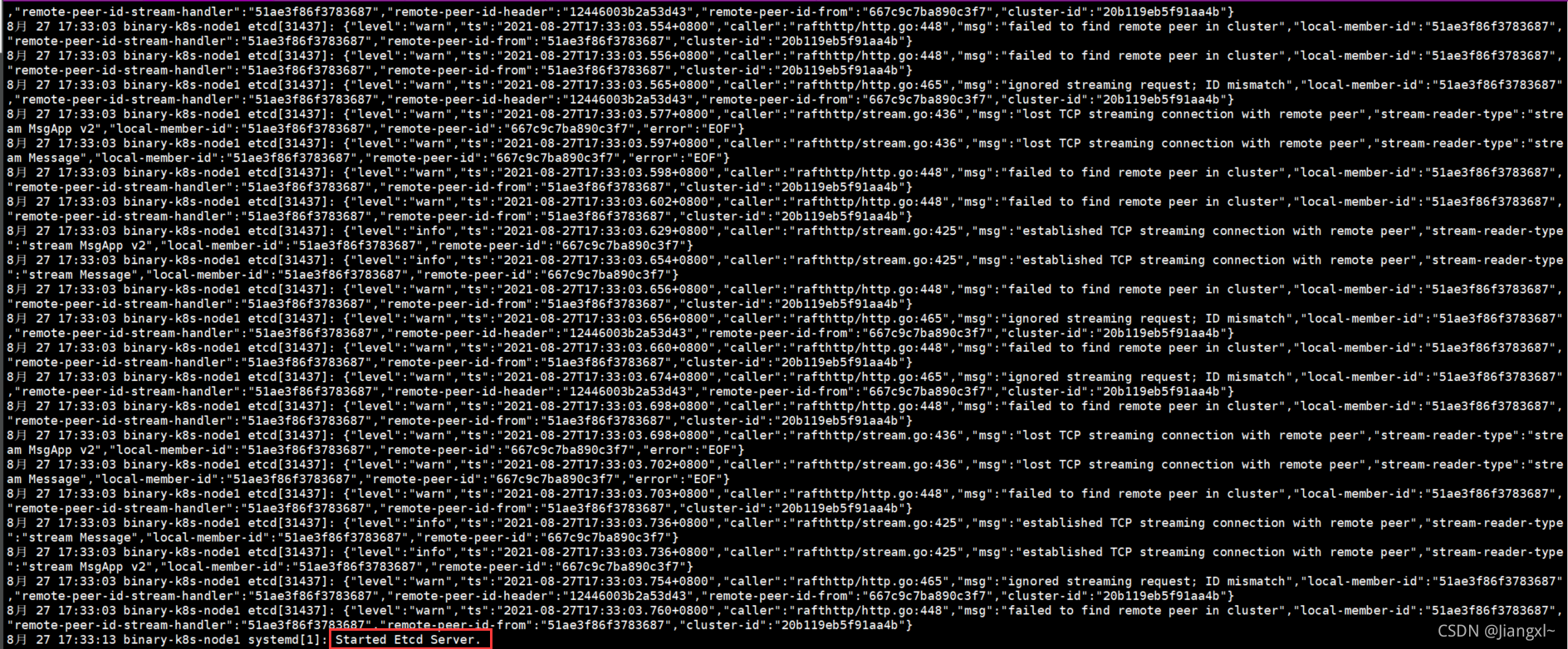

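etcd-1 waits in the starting state; as soon as the start command is run on etcd-2, etcd-2 starts successfully and etcd-1 finishes starting as well.

The etcd log can be followed with this command: [root@binary-k8s-master1 ~]\# journalctl -u etcd -f

1. Check the listening ports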

[root@binary-k8s-master1 ~]\# netstat -lnpt | grep etcd

tcp 0 0 192.168.20.10:2379 0.0.0.0:* LISTEN 9625/etcd

tcp 0 0 192.168.20.10:2380 0.0.0.0:* LISTEN 9625/etcd

2. Check the cluster status

If the 2379 listener in the configuration file does not include 127.0.0.1, check the cluster status like this:

[root@binary-k8s-master1 ~]\# ETCDCTL_API=3 /data/etcd/bin/etcdctl --cacert=/data/etcd/ssl/ca.pem --cert=/data/etcd/ssl/server.pem --key=/data/etcd/ssl/server-key.pem --endpoints="https://192.168.20.10:2379,https://192.168.20.12:2379,https://192.168.20.13:2379" endpoint health --write-out=table

+----------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+----------------------------+--------+-------------+-------+

| https://192.168.20.10:2379 | true | 32.322714ms | |

| https://192.168.20.12:2379 | true | 31.524079ms | |

| https://192.168.20.13:2379 | true | 38.985949ms | |

+----------------------------+--------+-------------+-------+

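If the 2379 listener in the configuration file includes 127.0.0.1, the cluster information can be queried as follows without specifying certificates: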

[root@binary-k8s-master1 /data/etcd/conf]\# /data/etcd/bin/etcdctl member list --write-out=table

+------------------+---------+--------+----------------------------+---------------------------------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+--------+----------------------------+---------------------------------------------------+------------+

| 12446003b2a53d43 | started | etcd-2 | https://192.168.20.12:2380 | https://127.0.0.1:2379,https://192.168.20.12:2379 | false |

| 51ae3f86f3783687 | started | etcd-1 | https://192.168.20.10:2380 | http://127.0.0.1:2379,https://192.168.20.10:2379 | false |

| 667c9c7ba890c3f7 | started | etcd-3 | https://192.168.20.13:2380 | http://127.0.0.1:2379,https://192.168.20.13:2379 | false |

+------------------+---------+--------+----------------------------+---------------------------------------------------+------------+
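For scripted checks, the health table can be reduced to a single number. The sketch below is an illustration only: `count_healthy_endpoints` is a hypothetical helper, and it assumes the column layout of the endpoint health table shown above, with HEALTH as the second column.

```shell
# Hypothetical helper: reads the table printed by
# `etcdctl endpoint health --write-out=table` on stdin and prints how many
# endpoints report HEALTH = true.
count_healthy_endpoints() {
  awk -F'|' 'NF > 3 && $3 ~ /^ *true *$/ { n++ } END { print n + 0 }'
}

# Demonstration on the sample output from this section.
count_healthy_endpoints <<'EOF'
+----------------------------+--------+-------------+-------+
| ENDPOINT | HEALTH | TOOK | ERROR |
+----------------------------+--------+-------------+-------+
| https://192.168.20.10:2379 | true | 32.322714ms | |
| https://192.168.20.12:2379 | true | 31.524079ms | |
| https://192.168.20.13:2379 | true | 38.985949ms | |
+----------------------------+--------+-------------+-------+
EOF
```

On a live cluster the etcdctl command from step 2 would be piped into the function, and the result compared with the expected member count of 3.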


Configuration file status

Log of a successful etcd startup

# 4. Deploy the Docker service

Docker must be installed on every Kubernetes node, master and worker alike.

Docker binary download URL: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

# 4.1. Install Docker

1. Unpack the binary package

tar zxf docker-19.03.9.tgz

2. Move the executables into the system path

mv docker/* /usr/bin

3. Create the configuration file

mkdir /etc/docker

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://9wn5tbfh.mirror.aliyuncs.com"]

}


# 4.2. Create a systemd unit for Docker

1. Write the unit file

vim /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

2. Start Docker

systemctl daemon-reload

systemctl start docker

systemctl enable docker


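# 5. Deploy the Kubernetes master node

Deploying a Kubernetes component from binaries roughly breaks down into the following steps:

- 1. Unpack the binaries

- 2. Copy the binaries to the target directory

- 3. Create the component's configuration file

- 4. Generate the component's kubeconfig file

- 5. Create a systemd unit to manage the service

- 6. Start the component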

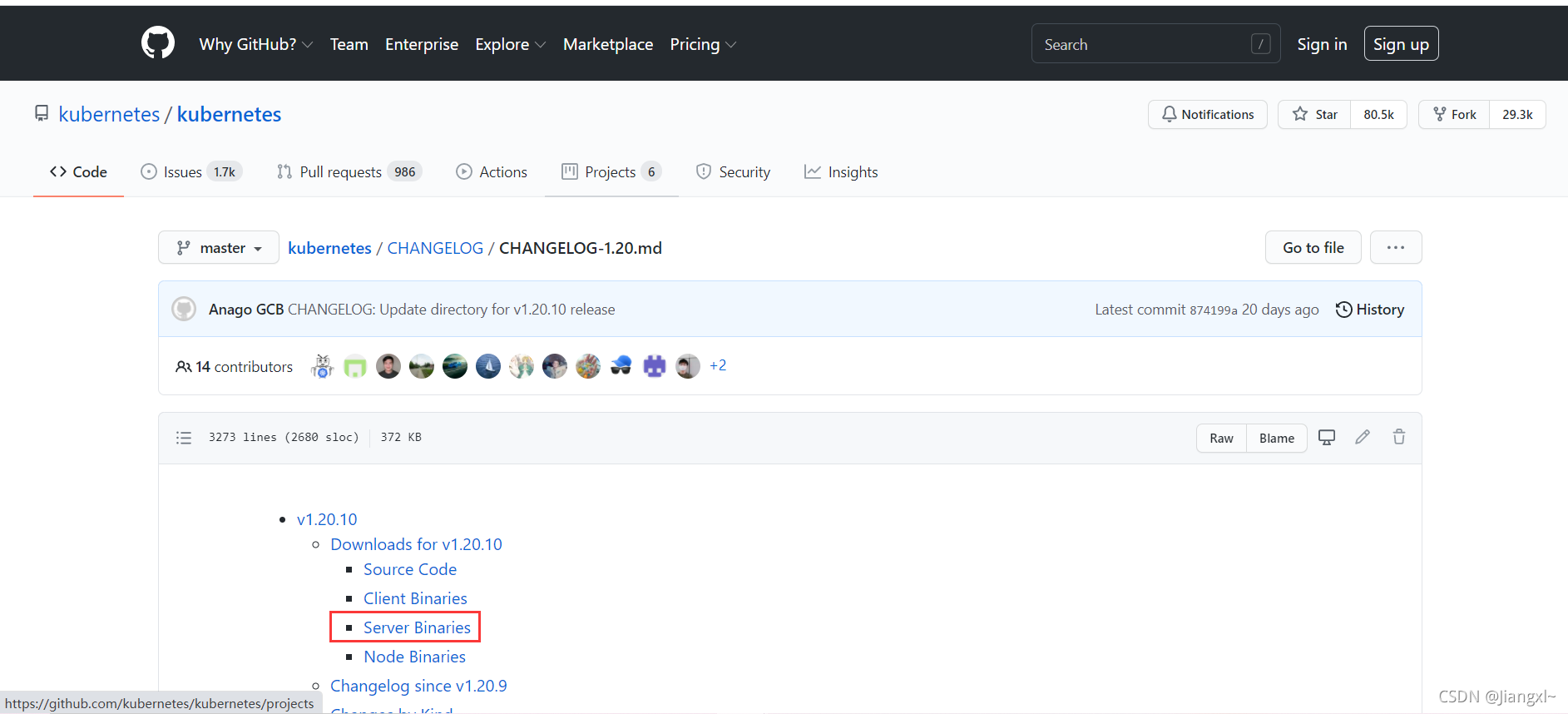

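The binaries for the master and worker nodes are both downloaded from GitHub; all master and worker components ship in a single package.

Download page: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.20.md

# 5.1. Generate the apiserver certificates with cfssl

1. Generate the self-signed CA certificate

1. Prepare the CA configuration files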

[root@binary-k8s-master1 ~/TLS/k8s]\# vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

[root@binary-k8s-master1 ~/TLS/k8s]\# vim ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

2. Generate the certificate files

[root@binary-k8s-master1 ~/TLS/k8s]\# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2021/09/01 16:20:42 [INFO] generating a new CA key and certificate from CSR

2021/09/01 16:20:42 [INFO] generate received request

2021/09/01 16:20:42 [INFO] received CSR

2021/09/01 16:20:42 [INFO] generating key: rsa-2048

2021/09/01 16:20:43 [INFO] encoded CSR

2021/09/01 16:20:43 [INFO] signed certificate with serial number 90951268335404710707183639990677546638148434604


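2. Issue the apiserver HTTPS certificate with the self-signed CA

The hosts field of the certificate request must contain the IP addresses of every master, load balancer, and VIP; the worker node addresses are optional.

1. Prepare the certificate request file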

[root@binary-k8s-master1 ~/TLS/k8s]\# vim kube-apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.20.10",

"192.168.20.11",

"192.168.20.12",

"192.168.20.13",

"192.168.20.9",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

2. Generate the certificate files

[root@binary-k8s-master1 ~/TLS/k8s]\# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

2021/09/01 16:30:24 [INFO] generate received request

2021/09/01 16:30:24 [INFO] received CSR

2021/09/01 16:30:24 [INFO] generating key: rsa-2048

2021/09/01 16:30:25 [INFO] encoded CSR

2021/09/01 16:30:25 [INFO] signed certificate with serial number 714472722509814799589567099679496298525490716083

2021/09/01 16:30:25 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").


3. View the generated certificate files

[root@binary-k8s-master1 ~/TLS/k8s]\# ll

总用量 36

-rw-r--r--. 1 root root 294 9月 1 16:20 ca-config.json

-rw-r--r--. 1 root root 1001 9月 1 16:20 ca.csr

-rw-r--r--. 1 root root 264 9月 1 16:20 ca-csr.json

-rw-------. 1 root root 1679 9月 1 16:20 ca-key.pem

-rw-r--r--. 1 root root 1359 9月 1 16:20 ca.pem

-rw-r--r--. 1 root root 1277 9月 1 16:30 kube-apiserver.csr

-rw-r--r--. 1 root root 602 9月 1 16:30 kube-apiserver-csr.json

-rw-------. 1 root root 1679 9月 1 16:30 kube-apiserver-key.pem

-rw-r--r--. 1 root root 1643 9月 1 16:30 kube-apiserver.pem
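Because a missing address in the hosts field only shows up later as a TLS error, it can be worth confirming that the generated kube-apiserver.pem really lists every expected IP. The text form printed by openssl x509 shows them as "IP Address:" entries. The sketch below filters that text; `san_has_ip` is a hypothetical helper, and the demonstration runs on a sample line rather than a real certificate.

```shell
# Hypothetical helper: succeeds if the `openssl x509 -noout -text` output
# read from stdin lists the given IP among its subjectAltName entries.
san_has_ip() (
  ip_re=$(printf '%s' "$1" | sed 's/\./\\./g')   # escape dots for the regex
  grep -Eq "IP Address:${ip_re}(,|[[:space:]]|\$)"
)

# Intended use on the master (requires openssl and the generated file):
#   openssl x509 -in kube-apiserver.pem -noout -text | san_has_ip 192.168.20.9

# Self-contained demonstration on a sample SAN line.
sample='IP Address:10.0.0.1, IP Address:127.0.0.1, IP Address:192.168.20.10, IP Address:192.168.20.9'
for ip in 192.168.20.9 192.168.20.11; do
  if printf '%s\n' "$sample" | san_has_ip "$ip"; then
    echo "$ip present"
  else
    echo "$ip MISSING"
  fi
done
```

The trailing delimiter in the pattern keeps 192.168.20.1 from matching 192.168.20.10 by prefix.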


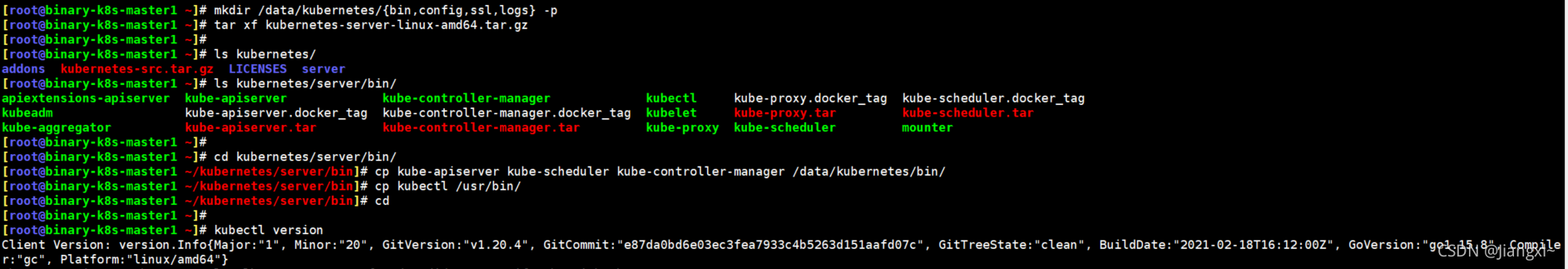

# 5.2. Unpack the binaries and copy the component programs

[root@binary-k8s-master1 ~]\# mkdir /data/kubernetes/{bin,config,ssl,logs} -p

[root@binary-k8s-master1 ~]\# tar xf kubernetes-server-linux-amd64.tar.gz

[root@binary-k8s-master1 ~]\# cd kubernetes/server/bin/

[root@binary-k8s-master1 ~/kubernetes/server/bin]\# cp kube-apiserver kube-scheduler kube-controller-manager /data/kubernetes/bin/

[root@binary-k8s-master1 ~/kubernetes/server/bin]\# cp kubectl /usr/bin/


# 5.3. Deploy the kube-apiserver component

# 5.3.1. Create the kube-apiserver configuration file

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--etcd-servers=https://192.168.20.10:2379,https://192.168.20.12:2379,https://192.168.20.13:2379 \

--bind-address=192.168.20.10 \

--secure-port=6443 \

--advertise-address=192.168.20.10 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth=true \

--token-auth-file=/data/kubernetes/config/token.csv \

--service-node-port-range=30000-32767 \

--kubelet-client-certificate=/data/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/data/kubernetes/ssl/kube-apiserver-key.pem \

--tls-cert-file=/data/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/data/kubernetes/ssl/ca.pem \

--service-account-key-file=/data/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--service-account-signing-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \

--etcd-cafile=/data/etcd/ssl/ca.pem \

--etcd-certfile=/data/etcd/ssl/server.pem \

--etcd-keyfile=/data/etcd/ssl/server-key.pem \

--requestheader-client-ca-file=/data/kubernetes/ssl/ca.pem \

--proxy-client-cert-file=/data/kubernetes/ssl/kube-apiserver.pem \

--proxy-client-key-file=/data/kubernetes/ssl/kube-apiserver-key.pem \

--requestheader-allowed-names=kubernetes \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/data/kubernetes/logs/k8s-audit.log"


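Meaning of the configuration options

| Option | Meaning |
|---|---|
| --logtostderr | Whether to log to stderr; false writes the logs to files under --log-dir |
| --v | Log verbosity; the higher the level, the more detailed the output |
| --log-dir | Log directory |
| --etcd-servers | etcd cluster addresses |
| --bind-address | Listen address, i.e. this host |
| --secure-port | HTTPS port |
| --advertise-address | Address advertised to the cluster |
| --allow-privileged | Allow privileged containers |
| --service-cluster-ip-range | IP range for Service resources |
| --enable-admission-plugins | Admission control plugins |
| --authorization-mode | Authorization modes; enables RBAC and Node authorization |
| --enable-bootstrap-token-auth | Enable bootstrap token authentication, used by the TLS bootstrap mechanism through which kubelet certificates are issued automatically |
| --token-auth-file | Path of the bootstrap token file |
| --service-node-port-range | Default port range for NodePort Services |
| --kubelet-client-certificate | Client certificate the apiserver uses to access kubelet |
| --kubelet-client-key | Client private key the apiserver uses to access kubelet |
| --tls-cert-file | apiserver HTTPS certificate |
| --tls-private-key-file | apiserver HTTPS private key |
| --client-ca-file | CA certificate path |
| --service-account-key-file | Key used to verify service account tokens (here the CA private key) |
| --service-account-issuer | Issuer identifier written into service account tokens; required from 1.20 onward |
| --service-account-signing-key-file | Private key used to sign service account tokens |
| --etcd-cafile | etcd CA certificate path |
| --etcd-certfile | etcd client certificate path |
| --etcd-keyfile | etcd client private key path |
| --requestheader-client-ca-file | Aggregation layer setting |
| --proxy-client-cert-file | Aggregation layer setting |
| --proxy-client-key-file | Aggregation layer setting |
| --requestheader-allowed-names | Aggregation layer setting |
| --requestheader-extra-headers-prefix | Aggregation layer setting |
| --enable-aggregator-routing | Aggregation layer setting |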
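The value of --service-cluster-ip-range also explains the 10.0.0.1 entry in the hosts field of the apiserver certificate: the built-in kubernetes Service is assigned the first usable address of that range, and pods reach the apiserver through it. A small sketch of the calculation, assuming the argument is written with its network address as in 10.0.0.0/24 (`first_service_ip` is a made-up name):

```shell
# Hypothetical helper: print the first usable address of a service CIDR.
# Assumes the CIDR is given with its network address (e.g. 10.0.0.0/24).
first_service_ip() (
  net=${1%/*}        # strip the prefix length, leaving e.g. 10.0.0.0
  IFS=.
  set -- $net        # split the address into its four octets
  n=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
)

first_service_ip 10.0.0.0/24
```

If the service range is changed, the certificate's hosts list needs the new first address as well.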

# 5.3.2. Create the TLS bootstrapping file

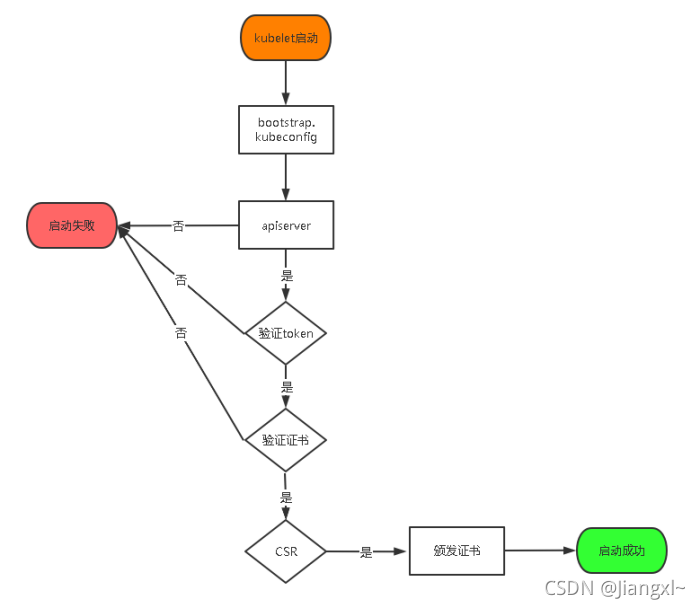

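TLS bootstrapping: once the master apiserver has TLS authentication enabled, kubelet and kube-proxy on the worker nodes must present valid certificates signed by the CA in order to communicate with kube-apiserver. With many worker nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complicated. To simplify the process, Kubernetes introduced the TLS bootstrapping mechanism to issue client certificates automatically: kubelet requests a certificate from the apiserver as a low-privilege user, and the certificate is signed dynamically on the cluster side by controller-manager. This approach is strongly recommended on worker nodes. It is currently used mainly for kubelet; kube-proxy still gets a certificate that we issue centrally.

TLS bootstrapping workflow:

kubelet first looks up its bootstrap configuration file and connects to the apiserver. The bootstrap token is verified, then the certificate is checked, and finally the certificate is issued and kubelet starts successfully; if any of these steps fails, kubelet fails to start.

1. Generate a token value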

[root@binary-k8s-master1 ~]\# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

d7f96b0d86c574d0f64a713608db0922

2. Create the token file

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/token.csv

d7f96b0d86c574d0f64a713608db0922,kubelet-bootstrap,10001,"system:node-bootstrapper"

Format of each line: token,username,UID,group (this line is an explanation and does not belong in token.csv).
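The two steps above can be combined so that the token never has to be copied by hand. This is a sketch only: `gen_bootstrap_token` is a made-up name, and it uses `od -t x1` (one byte at a time) instead of the `-t x` form above, which yields the same 32 hexadecimal characters without depending on the machine's byte order.

```shell
# Generate 16 random bytes and print them as 32 hexadecimal characters.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

token=$(gen_bootstrap_token)

# Same column order as token.csv above: token,username,UID,"group".
printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token"
```

On the master, the printed line would be redirected to /data/kubernetes/config/token.csv.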


# 5.3.4. Create a systemd unit to manage the apiserver

[root@binary-k8s-master1 ~]\# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/data/kubernetes/config/kube-apiserver.conf

ExecStart=/data/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target


# 5.3.5. Start the kube-apiserver component

1. Copy the required certificate files

[root@binary-k8s-master1 ~]\# cp TLS/k8s/*.pem /data/kubernetes/ssl/

2. Start kube-apiserver

[root@binary-k8s-master1 ~]\# systemctl daemon-reload

[root@binary-k8s-master1 ~]\# systemctl start kube-apiserver

[root@binary-k8s-master1 ~]\# systemctl enable kube-apiserver

3. Check the listening port

[root@binary-k8s-master1 ~]\# netstat -lnpt | grep kube

tcp 0 0 192.168.20.10:6443 0.0.0.0:* LISTEN 28546/kube-apiserve


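# 5.4. Deploy the kube-controller-manager component

# 5.4.1. Create the kube-controller-manager configuration file

Meaning of the configuration options

--kubeconfig: the kubeconfig file used to connect to the apiserver

--leader-elect: automatic leader election, used in a highly available cluster

--cluster-signing-cert-file: the CA certificate used to issue kubelet certificates automatically

--cluster-signing-key-file: the CA private key used to issue kubelet certificates automatically

--cluster-signing-duration: validity period of the issued certificates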

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--leader-elect=true \

--kubeconfig=/data/kubernetes/config/kube-controller-manager.kubeconfig \

--bind-address=192.168.20.10 \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-signing-cert-file=/data/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/data/kubernetes/ssl/ca-key.pem \

--root-ca-file=/data/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/data/kubernetes/ssl/ca-key.pem \

--cluster-signing-duration=87600h0m0s"


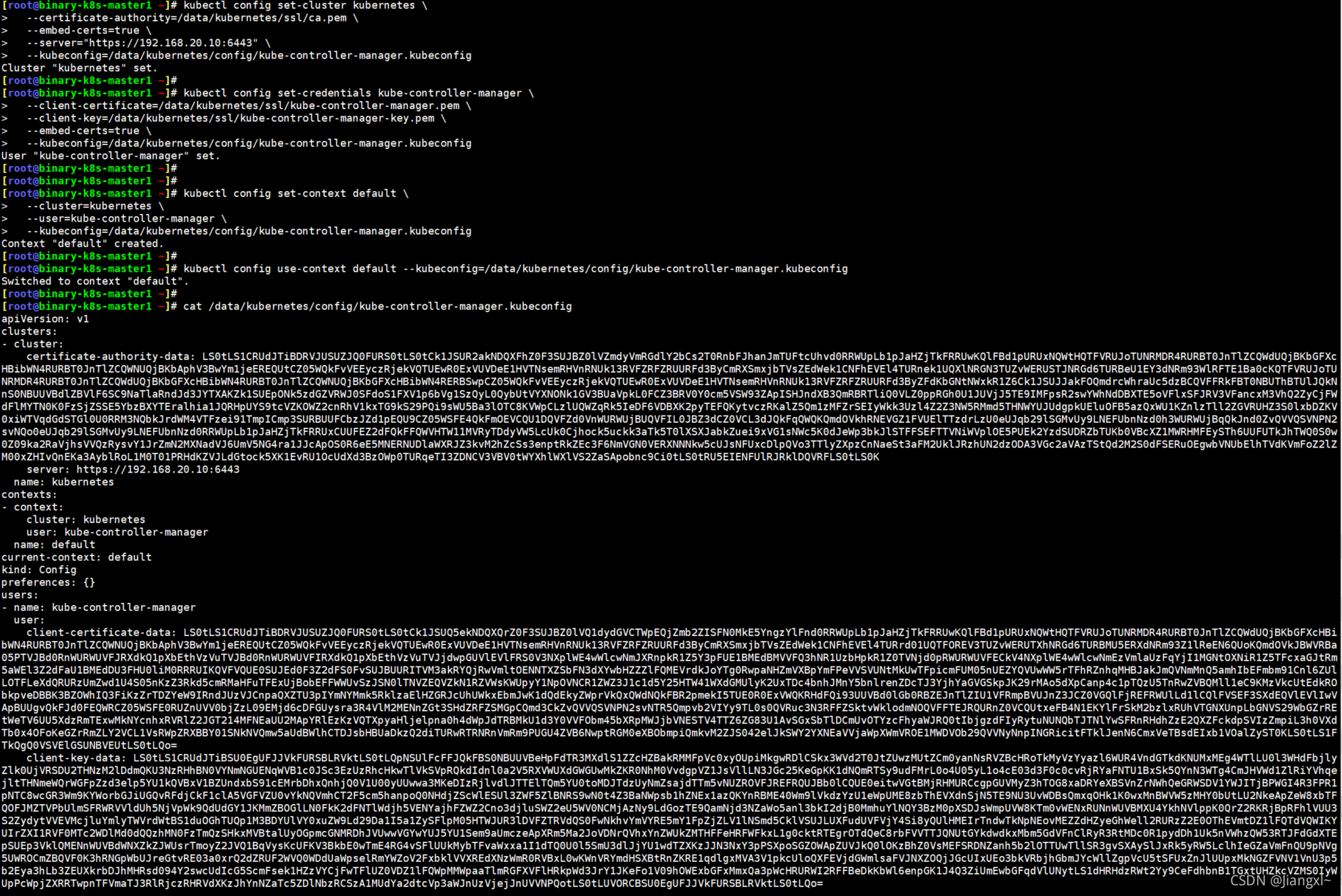

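# 5.4.2. Generate the kubeconfig file

kube-controller-manager connects to the apiserver through a kubeconfig file.

The kubeconfig file contains the cluster apiserver address, the certificate files, and the user.

1. The kubeconfig needs a client certificate, so generate one first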

[root@binary-k8s-master1 ~/TLS/k8s]\# vim kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

[root@binary-k8s-master1 ~/TLS/k8s]\# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2021/09/01 16:36:18 [INFO] generate received request

2021/09/01 16:36:18 [INFO] received CSR

2021/09/01 16:36:18 [INFO] generating key: rsa-2048

2021/09/01 16:36:19 [INFO] encoded CSR

2021/09/01 16:36:19 [INFO] signed certificate with serial number 719101376219834763931271155238486242405063666906

2021/09/01 16:36:19 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@binary-k8s-master1 ~/TLS/k8s]\# cp kube-controller-manager*pem /data/kubernetes/ssl/

2. Generate the kubeconfig file

Add the cluster (apiserver) information to the kubeconfig file:

[root@binary-k8s-master1 ~]\# kubectl config set-cluster kubernetes \

--certificate-authority=/data/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server="https://192.168.20.10:6443" \

--kubeconfig=/data/kubernetes/config/kube-controller-manager.kubeconfig

Add the client certificate (user credentials) to the kubeconfig file:

[root@binary-k8s-master1 ~]\# kubectl config set-credentials kube-controller-manager \

--client-certificate=/data/kubernetes/ssl/kube-controller-manager.pem \

--client-key=/data/kubernetes/ssl/kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/data/kubernetes/config/kube-controller-manager.kubeconfig

Add the context that ties the cluster and the user together:

[root@binary-k8s-master1 ~]\# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-controller-manager \

--kubeconfig=/data/kubernetes/config/kube-controller-manager.kubeconfig

3. Set the generated context as the current context of the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config use-context default --kubeconfig=/data/kubernetes/config/kube-controller-manager.kubeconfig


# 5.4.3. Create a systemd unit to manage the service

[root@binary-k8s-master1 ~]\# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/data/kubernetes/config/kube-controller-manager.conf

ExecStart=/data/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target


# 5.4.4. Start the kube-controller-manager component

1. Start the service

[root@binary-k8s-master1 ~]\# systemctl daemon-reload

[root@binary-k8s-master1 ~]\# systemctl start kube-controller-manager

[root@binary-k8s-master1 ~]\# systemctl enable kube-controller-manager

2. Check the listening ports

[root@binary-k8s-master1 ~]\# netstat -lnpt | grep kube

tcp 0 0 192.168.20.10:6443 0.0.0.0:* LISTEN 28546/kube-apiserve

tcp 0 0 192.168.20.10:10257 0.0.0.0:* LISTEN 28941/kube-controll

tcp6 0 0 :::10252 :::* LISTEN 28941/kube-controll


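# 5.5. Deploy the kube-scheduler component

# 5.5.1. Create the kube-scheduler configuration file

Explanation of the configuration options

--kubeconfig: the kubeconfig file used to connect to the apiserver

--leader-elect: enable leader election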

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--leader-elect \

--kubeconfig=/data/kubernetes/config/kube-scheduler.kubeconfig \

--bind-address=192.168.20.10"


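# 5.5.2. Generate the kubeconfig file

kube-scheduler connects to the apiserver through a kubeconfig file.

The kubeconfig file contains the cluster apiserver address, the certificate files, and the user.

1. Create the certificate request file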

[root@binary-k8s-master1 ~/TLS/k8s]\# vim kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

2. Generate the certificate

[root@binary-k8s-master1 ~/TLS/k8s]\# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2021/09/02 14:50:40 [INFO] generate received request

2021/09/02 14:50:40 [INFO] received CSR

2021/09/02 14:50:40 [INFO] generating key: rsa-2048

2021/09/02 14:50:42 [INFO] encoded CSR

2021/09/02 14:50:42 [INFO] signed certificate with serial number 91388852050290848663498441480862532526947759393

2021/09/02 14:50:42 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

3. View the certificate files

[root@binary-k8s-master1 ~/TLS/k8s]\# ll

总用量 68

-rw-r--r--. 1 root root 294 9月 1 16:20 ca-config.json

-rw-r--r--. 1 root root 1001 9月 1 16:20 ca.csr

-rw-r--r--. 1 root root 264 9月 1 16:20 ca-csr.json

-rw-------. 1 root root 1679 9月 1 16:20 ca-key.pem

-rw-r--r--. 1 root root 1359 9月 1 16:20 ca.pem

-rw-r--r--. 1 root root 1277 9月 1 16:30 kube-apiserver.csr

-rw-r--r--. 1 root root 602 9月 1 16:30 kube-apiserver-csr.json

-rw-------. 1 root root 1679 9月 1 16:30 kube-apiserver-key.pem

-rw-r--r--. 1 root root 1643 9月 1 16:30 kube-apiserver.pem

-rw-r--r--. 1 root root 1045 9月 1 16:36 kube-controller-manager.csr

-rw-r--r--. 1 root root 255 9月 1 16:46 kube-controller-manager-csr.json

-rw-------. 1 root root 1675 9月 1 16:36 kube-controller-manager-key.pem

-rw-r--r--. 1 root root 1436 9月 1 16:36 kube-controller-manager.pem

-rw-r--r--. 1 root root 1029 9月 2 14:50 kube-scheduler.csr

-rw-r--r--. 1 root root 245 9月 2 14:50 kube-scheduler-csr.json

-rw-------. 1 root root 1675 9月 2 14:50 kube-scheduler-key.pem

-rw-r--r--. 1 root root 1424 9月 2 14:50 kube-scheduler.pem

4. Copy the certificate files to the target path

[root@binary-k8s-master1 ~/TLS/k8s]\# cp kube-scheduler*.pem /data/kubernetes/ssl/

5. Generate the kubeconfig file

#Add the cluster's apiserver information to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-cluster kubernetes \

--certificate-authority=/data/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server="https://192.168.20.10:6443" \

--kubeconfig=/data/kubernetes/config/kube-scheduler.kubeconfig

#Add the client certificate and key (the credentials) to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-credentials kube-scheduler \

--client-certificate=/data/kubernetes/ssl/kube-scheduler.pem \

--client-key=/data/kubernetes/ssl/kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/data/kubernetes/config/kube-scheduler.kubeconfig

#Add the context, which ties the cluster entry to the user entry, to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-scheduler \

--kubeconfig=/data/kubernetes/config/kube-scheduler.kubeconfig

6. Make the default context the current context of the generated kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config use-context default --kubeconfig=/data/kubernetes/config/kube-scheduler.kubeconfig
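The four kubectl config steps above recur for every component in this guide; only the user name, the certificate files, and the output path change. A minimal sketch of a wrapper, assuming the directory layout used here. gen_kubeconfig and the SSL_DIR variable are hypothetical names introduced for illustration and are not part of kubectl:

```shell
#!/usr/bin/env bash
# gen_kubeconfig USER CERT_BASENAME OUTPUT_FILE [APISERVER_URL]
# Hypothetical helper: runs the same four "kubectl config" steps shown above.
# SSL_DIR is an assumed override for the certificate directory.
gen_kubeconfig() {
  local user="$1" cert="$2" out="$3"
  local server="${4:-https://192.168.20.10:6443}"
  local ssl_dir="${SSL_DIR:-/data/kubernetes/ssl}"

  # 1. Cluster entry: apiserver address plus the embedded CA certificate.
  kubectl config set-cluster kubernetes \
    --certificate-authority="${ssl_dir}/ca.pem" \
    --embed-certs=true \
    --server="${server}" \
    --kubeconfig="${out}" || return 1

  # 2. User entry: the client certificate and key of this component.
  kubectl config set-credentials "${user}" \
    --client-certificate="${ssl_dir}/${cert}.pem" \
    --client-key="${ssl_dir}/${cert}-key.pem" \
    --embed-certs=true \
    --kubeconfig="${out}" || return 1

  # 3. Context: ties the cluster entry to the user entry.
  kubectl config set-context default \
    --cluster=kubernetes \
    --user="${user}" \
    --kubeconfig="${out}" || return 1

  # 4. Select that context as the current one.
  kubectl config use-context default --kubeconfig="${out}"
}
```

Under these assumptions, calling gen_kubeconfig kube-scheduler kube-scheduler /data/kubernetes/config/kube-scheduler.kubeconfig would reproduce steps 5 and 6.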

# 5.5.3. Create a systemd unit file to manage the service

[root@binary-k8s-master1 ~]\# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/data/kubernetes/config/kube-scheduler.conf

ExecStart=/data/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

# 5.5.4. Start the kube-scheduler component

1. Start the service

[root@binary-k8s-master1 ~]\# systemctl daemon-reload

[root@binary-k8s-master1 ~]\# systemctl start kube-scheduler

[root@binary-k8s-master1 ~]\# systemctl enable kube-scheduler

2. Check the ports

[root@binary-k8s-master1 ~]\# netstat -lnpt | grep kube

tcp 0 0 192.168.20.10:6443 0.0.0.0:* LISTEN 28546/kube-apiserve

tcp 0 0 192.168.20.10:10257 0.0.0.0:* LISTEN 28941/kube-controll

tcp 0 0 192.168.20.10:10259 0.0.0.0:* LISTEN 6127/kube-scheduler

tcp6 0 0 :::10251 :::* LISTEN 6127/kube-scheduler

tcp6 0 0 :::10252 :::* LISTEN 28941/kube-controll
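To check a single component's ports without reading the whole listing, the netstat output can be filtered by program name. A small sketch: ports_for is a hypothetical helper, and it assumes the column layout of netstat -lnpt shown above, in which long program names may be truncated:

```shell
#!/usr/bin/env bash
# ports_for NAME: read "netstat -lnpt" output on stdin and print the
# listening ports owned by program NAME, one per line, sorted numerically.
# ports_for is a hypothetical helper name used for illustration only.
ports_for() {
  awk -v want="$1" '
    $6 == "LISTEN" {
      split($7, prog, "/")          # $7 looks like "6127/kube-scheduler"
      # netstat truncates long program names (e.g. "kube-controll"),
      # so accept a prefix match in either direction.
      if (prog[2] != "" && (index(prog[2], want) == 1 || index(want, prog[2]) == 1)) {
        n = split($4, addr, ":")    # $4 looks like "192.168.20.10:10259" or ":::10251"
        print addr[n]
      }
    }' | sort -n
}

# Usage on a master node:
#   netstat -lnpt | ports_for kube-scheduler
```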

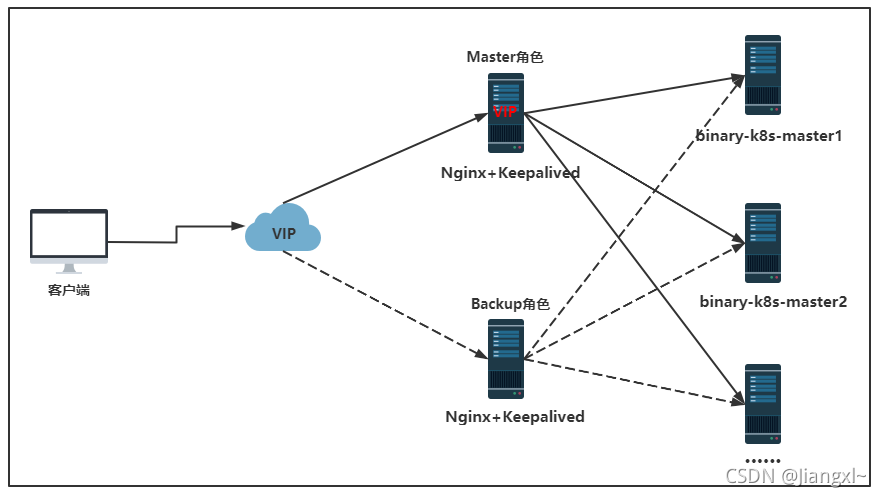

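# 5.6. Prepare the kubeconfig file kubectl needs to connect to the cluster

To operate on cluster resources, kubectl needs a kubeconfig file that tells it how to reach the apiserver. This is the same file that kubeadm generates on the master node as /root/.kube/config after installing a cluster; kubectl reads it to connect to the apiserver.

# 5.6.1. Generate the certificate files

1. Create the certificate configuration file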

[root@binary-k8s-master1 ~/TLS/k8s]\# vim kubectl-csr.json

{

"CN": "kubectl",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

2. Generate the certificate

[root@binary-k8s-master1 ~/TLS/k8s]\# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubectl-csr.json | cfssljson -bare kubectl

2021/09/02 17:20:44 [INFO] generate received request

2021/09/02 17:20:44 [INFO] received CSR

2021/09/02 17:20:44 [INFO] generating key: rsa-2048

2021/09/02 17:20:45 [INFO] encoded CSR

2021/09/02 17:20:45 [INFO] signed certificate with serial number 398472525484598388169457456772550114435870340604

2021/09/02 17:20:45 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

3. Check the generated certificate files

[root@binary-k8s-master1 ~/TLS/k8s]\# ll

总用量 84

-rw-r--r--. 1 root root 294 9月 1 16:20 ca-config.json

-rw-r--r--. 1 root root 1001 9月 1 16:20 ca.csr

-rw-r--r--. 1 root root 264 9月 1 16:20 ca-csr.json

-rw-------. 1 root root 1679 9月 1 16:20 ca-key.pem

-rw-r--r--. 1 root root 1359 9月 1 16:20 ca.pem

-rw-r--r--. 1 root root 1277 9月 1 16:30 kube-apiserver.csr

-rw-r--r--. 1 root root 602 9月 1 16:30 kube-apiserver-csr.json

-rw-------. 1 root root 1679 9月 1 16:30 kube-apiserver-key.pem

-rw-r--r--. 1 root root 1643 9月 1 16:30 kube-apiserver.pem

-rw-r--r--. 1 root root 1045 9月 1 16:36 kube-controller-manager.csr

-rw-r--r--. 1 root root 255 9月 1 16:46 kube-controller-manager-csr.json

-rw-------. 1 root root 1675 9月 1 16:36 kube-controller-manager-key.pem

-rw-r--r--. 1 root root 1436 9月 1 16:36 kube-controller-manager.pem

-rw-r--r--. 1 root root 1013 9月 2 17:20 kubectl.csr

-rw-r--r--. 1 root root 231 9月 2 17:20 kubectl-csr.json

-rw-------. 1 root root 1679 9月 2 17:20 kubectl-key.pem

-rw-r--r--. 1 root root 1403 9月 2 17:20 kubectl.pem

-rw-r--r--. 1 root root 1029 9月 2 14:50 kube-scheduler.csr

-rw-r--r--. 1 root root 245 9月 2 14:50 kube-scheduler-csr.json

-rw-------. 1 root root 1675 9月 2 14:50 kube-scheduler-key.pem

-rw-r--r--. 1 root root 1424 9月 2 14:50 kube-scheduler.pem

4. Copy the certificate files to the target directory

[root@binary-k8s-master1 ~/TLS/k8s]\# \cp kubectl*.pem /data/kubernetes/ssl/

# 5.6.2. Generate the kubeconfig file

1. Add the cluster's apiserver information to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-cluster kubernetes \

--certificate-authority=/data/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server="https://192.168.20.10:6443" \

--kubeconfig=/root/.kube/config

2. Add the client certificate and key (the credentials) to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-credentials cluster-admin \

--client-certificate=/data/kubernetes/ssl/kubectl.pem \

--client-key=/data/kubernetes/ssl/kubectl-key.pem \

--embed-certs=true \

--kubeconfig=/root/.kube/config

3. Add the context, which ties the cluster entry to the user entry, to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-context default \

--cluster=kubernetes \

--user=cluster-admin \

--kubeconfig=/root/.kube/config

4. Make the default context the current context of the generated kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config use-context default --kubeconfig=/root/.kube/config

# 5.6.3. Use kubectl to check the connection to the cluster

At this point the deployment of the master node components is complete.

[root@binary-k8s-master1 ~]\# kubectl get node

No resources found

[root@binary-k8s-master1 ~]\# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

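# 6. Deploy the node components on the master node

# 6.1. Authorize the kubelet-bootstrap user to request certificates

Once this binding has been created here, it does not need to be repeated when further nodes join the cluster.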

[root@binary-k8s-master1 ~]\# kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

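# 6.2. Deploy the kubelet component on the master node

The master also has to run some pods (the calico components, for example, all run as pods), so the master node needs the kubelet and kube-proxy components as well.

# 6.2.1. Copy the kubelet and kube-proxy binaries to the target directory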

[root@binary-k8s-master1 ~]\# cp kubernetes/server/bin/{kubelet,kube-proxy} /data/kubernetes/bin/

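# 6.2.2. Create the kubelet configuration file

Meaning of the flags in the configuration file:

--hostname-override: node name, which must be unique within the cluster

--network-plugin: enables the CNI network plugin

--kubeconfig: path of the automatically generated kubeconfig file used to connect to the apiserver

--bootstrap-kubeconfig: path of the kubeconfig file used on first start to request a certificate from the apiserver

--config: path of the parameter configuration file

--cert-dir: directory in which the kubelet certificates are generated

--pod-infra-container-image: image of the pod's infrastructure (pause) container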

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/kubelet.conf

KUBELET_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--hostname-override=binary-k8s-master1 \

--network-plugin=cni \

--kubeconfig=/data/kubernetes/config/kubelet.kubeconfig \

--bootstrap-kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig \

--config=/data/kubernetes/config/kubelet-config.yml \

--cert-dir=/data/kubernetes/ssl \

--pod-infra-container-image=pause-amd64:3.0"

# 6.2.3. Create the kubelet-config.yml parameter file

The parameters of the kubelet and kube-proxy services are configured in YAML files.

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/kubelet-config.yml

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0 #listen address

port: 10250 #listen port

readOnlyPort: 10255

cgroupDriver: cgroupfs #cgroup driver

clusterDNS:

- 10.0.0.2

clusterDomain: cluster.local

failSwapOn: false

authentication:

  anonymous:

    enabled: false

  webhook:

    cacheTTL: 2m0s

    enabled: true

  x509:

    clientCAFile: /data/kubernetes/ssl/ca.pem #path of the CA certificate file

authorization:

  mode: Webhook

  webhook:

    cacheAuthorizedTTL: 5m0s

    cacheUnauthorizedTTL: 30s

evictionHard:

  imagefs.available: 15%

  memory.available: 100Mi

  nodefs.available: 10%

  nodefs.inodesFree: 5%

maxOpenFiles: 1000000

maxPods: 110 #maximum number of pods that can run on this node

# 6.2.4. Create the bootstrap-kubeconfig file

1. Add the cluster's apiserver information to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-cluster kubernetes \

--certificate-authority=/data/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server="https://192.168.20.10:6443" \

--kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

2. Add the token to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-credentials "kubelet-bootstrap" \

--token=d7f96b0d86c574d0f64a713608db0922 \

--kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

#This token is the one in the previously generated /data/kubernetes/config/token.csv

3. Add the context, which ties the cluster entry to the user entry, to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

4. Make the default context the current context of the generated kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config use-context default --kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

# 6.2.5. Create the systemd unit file and start the service

1. Create the systemd unit file

[root@binary-k8s-master1 ~]\# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/data/kubernetes/config/kubelet.conf

ExecStart=/data/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

2. Start the kubelet service

[root@binary-k8s-master1 ~]\# systemctl daemon-reload

[root@binary-k8s-master1 ~]\# systemctl start kubelet

[root@binary-k8s-master1 ~]\# systemctl enable kubelet

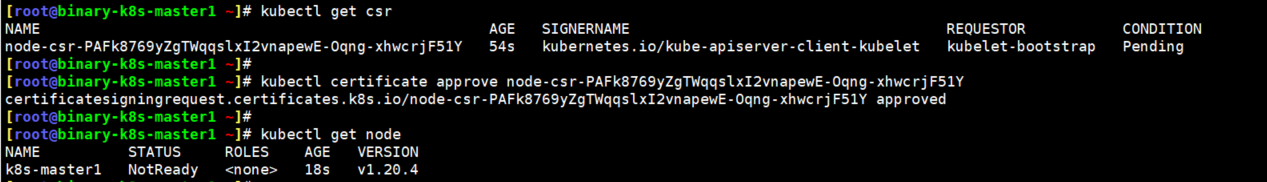

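# 6.2.6. Join the master node to the cluster as a node

Once kubelet has started successfully, it sends the apiserver a request to join the cluster, and the node joins only after that request has been approved on the master. This applies even though the node components here are deployed on the master itself: the join request is still sent and still has to be approved.

After kubelet starts, it first creates a kubelet-client.key.tmp file in the certificate directory. Once the node's request has been approved with kubectl certificate approve, kubelet-client.key.tmp disappears and a kubelet-client-current.pem certificate file is generated, which kubelet uses to connect to the apiserver. From then on kubectl get node shows the node.

Extension: to rename a node later, delete all of the generated kubelet certificate files, remove the node with kubectl delete node, change the node name in the kubelet configuration file, and delete the old request with kubectl delete csr. Then restart kubelet so that it submits a new request; once that request is approved, the node appears under its new name.

The kubelet ports are opened only after the request has been approved and kubelet has generated its certificate files.

Note: a node has truly joined the cluster only after its kubelet request has been approved on the master and kube-proxy has started successfully. Even if kubectl get node shows the node as Ready, it is not usable until kube-proxy is up.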

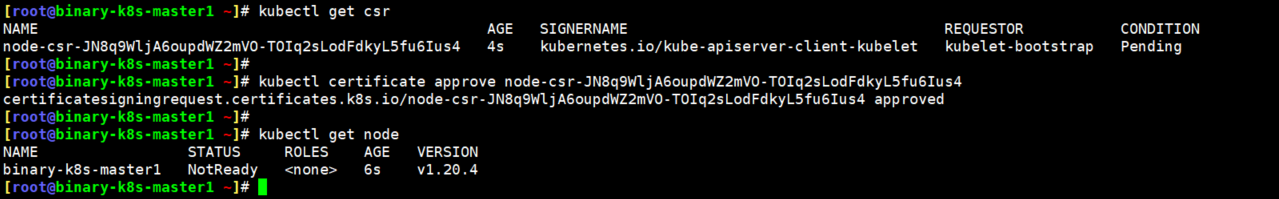

1. Run the following command on the master node to list the requests

[root@binary-k8s-master1 ~]\# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-JN8q9WljA6oupdWZ2mVO-TOIq2sLodFdkyL5fu6Ius4 4s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

2. Approve this node's request to join the cluster

[root@binary-k8s-master1 ~]\# kubectl certificate approve node-csr-JN8q9WljA6oupdWZ2mVO-TOIq2sLodFdkyL5fu6Ius4

certificatesigningrequest.certificates.k8s.io/node-csr-JN8q9WljA6oupdWZ2mVO-TOIq2sLodFdkyL5fu6Ius4 approved

3. Check the nodes

[root@binary-k8s-master1 ~]\# kubectl get node

NAME STATUS ROLES AGE VERSION

binary-k8s-master1 NotReady <none> 6s v1.20.4

#The master node now appears in the cluster's node list

4. Check the kubelet ports

[root@binary-k8s-master1 ~]\# netstat -lnpt | grep kubelet

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 29092/kubelet

tcp 0 0 127.0.0.1:41132 0.0.0.0:* LISTEN 29092/kubelet

tcp6 0 0 :::10250 :::* LISTEN 29092/kubelet

tcp6 0 0 :::10255 :::* LISTEN 29092/kubelet
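When several nodes bootstrap at the same time, approving requests one by one gets tedious. A minimal sketch that approves every CSR that is still Pending and was submitted by kubelet-bootstrap. approve_pending_csrs is a hypothetical helper, and it assumes the five-column kubectl get csr layout shown above (other kubectl versions may print additional columns, in which case the column positions need adjusting):

```shell
#!/usr/bin/env bash
# approve_pending_csrs: approve all Pending CSRs requested by kubelet-bootstrap.
# Hypothetical helper; assumes the columns NAME AGE SIGNERNAME REQUESTOR CONDITION.
approve_pending_csrs() {
  # --no-headers drops the header row so that every line is one CSR.
  kubectl get csr --no-headers |
    while read -r name _ _ requestor condition; do
      if [ "${requestor}" = "kubelet-bootstrap" ] && [ "${condition}" = "Pending" ]; then
        # stdin is redirected so the command cannot consume the loop input.
        kubectl certificate approve "${name}" </dev/null
      fi
    done
}
```

Because this approves requests without looking at them individually, it is best suited to a lab setup like this one; reviewing the list with kubectl get csr first remains the safer habit.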

# 6.3. Deploy kube-proxy on the master node

# 6.3.1. Create the kube-proxy configuration file

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--config=/data/kubernetes/config/kube-proxy-config.yml"

# 6.3.2. Create the kube-proxy parameter file

[root@binary-k8s-master1 ~]\# vim /data/kubernetes/config/kube-proxy-config.yml

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0 #listen address

metricsBindAddress: 0.0.0.0:10249 #metrics listen address and port

clientConnection:

  kubeconfig: /data/kubernetes/config/kube-proxy.kubeconfig #kubeconfig file used to communicate with the apiserver

hostnameOverride: binary-k8s-master1 #name of the current node

clusterCIDR: 10.244.0.0/16

# 6.3.3. Generate the kubeconfig file

1. Create the certificate configuration file

[root@binary-k8s-master1 ~/TLS/k8s]\# vim kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

2. Generate the certificate

[root@binary-k8s-master1 ~/TLS/k8s]\# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2021/09/03 16:04:23 [INFO] generate received request

2021/09/03 16:04:23 [INFO] received CSR

2021/09/03 16:04:23 [INFO] generating key: rsa-2048

2021/09/03 16:04:24 [INFO] encoded CSR

2021/09/03 16:04:24 [INFO] signed certificate with serial number 677418055440191127932354470575565723194258386145

2021/09/03 16:04:24 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

3. Check the certificate files

[root@binary-k8s-master1 ~/TLS/k8s]\# ll *proxy*

-rw-r--r--. 1 root root 1009 9月 3 16:04 kube-proxy.csr

-rw-r--r--. 1 root root 230 9月 3 16:04 kube-proxy-csr.json

-rw-------. 1 root root 1679 9月 3 16:04 kube-proxy-key.pem

-rw-r--r--. 1 root root 1403 9月 3 16:04 kube-proxy.pem

4. Copy the certificate files to the target path

[root@binary-k8s-master1 ~/TLS/k8s]\# cp kube-proxy*.pem /data/kubernetes/ssl/

5. Generate the kubeconfig file

#Add the cluster's apiserver information to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-cluster kubernetes \

--certificate-authority=/data/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server="https://192.168.20.10:6443" \

--kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

#Add the client certificate and key (the credentials) to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-credentials kube-proxy \

--client-certificate=/data/kubernetes/ssl/kube-proxy.pem \

--client-key=/data/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

#Add the context, which ties the cluster entry to the user entry, to the kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

6. Make the default context the current context of the generated kubeconfig file

[root@binary-k8s-master1 ~]\# kubectl config use-context default --kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

# 6.3.4. Create a systemd unit file to manage the service

[root@binary-k8s-master1 ~]\# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/data/kubernetes/config/kube-proxy.conf

ExecStart=/data/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# 6.3.5. Start the kube-proxy component

1. Start the service

[root@binary-k8s-master1 ~]\# systemctl daemon-reload

[root@binary-k8s-master1 ~]\# systemctl start kube-proxy

[root@binary-k8s-master1 ~]\# systemctl enable kube-proxy

2. Check the ports

[root@binary-k8s-master1 ~]\# netstat -lnpt | grep kube-proxy

tcp6 0 0 :::10249 :::* LISTEN 29354/kube-proxy

tcp6 0 0 :::10256 :::* LISTEN 29354/kube-proxy

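# 6.4. Authorize the apiserver to access kubelet

If the apiserver is not authorized to access kubelet, some kubectl operations that read information from the cluster do not work; kubectl logs, for example, fails.

In practice this means creating RBAC resources that allow the apiserver to access kubelet's resources.

1. Write the resource YAML file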

[root@binary-k8s-master1 ~]\# vim apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

  annotations:

    rbac.authorization.kubernetes.io/autoupdate: "true"

  labels:

    kubernetes.io/bootstrapping: rbac-defaults

  name: system:kube-apiserver-to-kubelet

rules:

  - apiGroups:

      - ""

    resources:

      - nodes/proxy

      - nodes/stats

      - nodes/log

      - nodes/spec

      - nodes/metrics

      - pods/log

    verbs:

      - "*"

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: system:kube-apiserver

  namespace: ""

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: system:kube-apiserver-to-kubelet

subjects:

  - apiGroup: rbac.authorization.k8s.io

    kind: User

    name: kubernetes

2. Create the resources

[root@binary-k8s-master1 ~]\# kubectl apply -f apiserver-to-kubelet-rbac.yaml

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created
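One way to confirm that the binding took effect is to ask the apiserver whether the user kubernetes (the subject named in the ClusterRoleBinding above) may read the node subresources. A minimal sketch using kubectl auth can-i with impersonation; check_apiserver_kubelet_rbac is a hypothetical helper, and it assumes the kubeconfig in use is allowed to impersonate users, which the cluster-admin kubeconfig from 5.6 should be:

```shell
#!/usr/bin/env bash
# check_apiserver_kubelet_rbac: report whether user "kubernetes" may "get"
# each node subresource granted by system:kube-apiserver-to-kubelet.
# Hypothetical helper; returns non-zero if any subresource is denied.
check_apiserver_kubelet_rbac() {
  local sub rc=0
  for sub in proxy stats log spec metrics; do
    # "kubectl auth can-i" exits 0 when the action is allowed, 1 otherwise.
    if kubectl auth can-i get nodes --subresource="${sub}" --as=kubernetes >/dev/null 2>&1; then
      echo "nodes/${sub}: allowed"
    else
      echo "nodes/${sub}: denied"
      rc=1
    fi
  done
  return "${rc}"
}
```

If every line reads allowed, commands such as kubectl logs should be able to reach kubelet through the apiserver.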

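# 7. Deploy the calico network component for Kubernetes

In section 6 the master node joined the cluster, but it stays in the NotReady state because the cluster has no network component yet. Once the network component is deployed, the master node becomes Ready.

1. Deploy calico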

[root@binary-k8s-master1 ~]\# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

2. Check the status of the resources

[root@binary-k8s-master1 ~]\# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-bnwcl 1/1 Running 0 11m

calico-node-mghdj 1/1 Running 0 11m

3. Check the status of the master node

[root@binary-k8s-master1 ~]\# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready <none> 99m v1.20.4
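The calico pods can take a while to pull images and start, so the node may not turn Ready right away. A small polling sketch that reads the STATUS column of kubectl get node, as in the output above; wait_node_ready is a hypothetical helper, and the retry count and delay are arbitrary defaults. The built-in kubectl wait --for=condition=Ready node/NAME is an alternative that does much the same job:

```shell
#!/usr/bin/env bash
# wait_node_ready NODE [TRIES] [DELAY_SECONDS]
# Hypothetical helper: poll "kubectl get node" until NODE reports Ready.
wait_node_ready() {
  local node="$1" tries="${2:-30}" delay="${3:-10}" i=0 status=""
  while [ "${i}" -lt "${tries}" ]; do
    # The second column of "kubectl get node" is STATUS (Ready / NotReady).
    status="$(kubectl get node "${node}" --no-headers 2>/dev/null | awk '{print $2}')"
    if [ "${status}" = "Ready" ]; then
      echo "${node} is Ready"
      return 0
    fi
    i=$((i + 1))
    sleep "${delay}"
  done
  echo "${node} did not become Ready (last status: ${status:-unknown})" >&2
  return 1
}

# Usage, with the node name used in this guide:
#   wait_node_ready binary-k8s-master1
```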

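# 8. Deploy the Kubernetes node

# 8.1. Extract the binaries and copy the component programs

Unless the prompt shows otherwise, the following operations are performed on node1 only.

1. Prepare the binaries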

[root@binary-k8s-node1 ~]\# tar xf kubernetes-server-linux-amd64.tar.gz

[root@binary-k8s-node1 ~]\# mkdir -p /data/kubernetes/{bin,config,ssl,logs}

[root@binary-k8s-node1 ~]\# cp kubernetes/server/bin/{kubelet,kube-proxy} /data/kubernetes/bin/

[root@binary-k8s-node1 ~]\# cp kubernetes/server/bin/kubectl /usr/bin/

2. Copy the certificate files from the master node to the node

[root@binary-k8s-master1 ~]\# scp -rp /data/kubernetes/ssl/* binary-k8s-node1:/data/kubernetes/ssl/

[root@binary-k8s-master1 ~]\# scp -rp /data/kubernetes/config/token.csv root@binary-k8s-node1:/data/kubernetes/config

3. Delete the kubelet certificates that were copied over from the master node

[root@binary-k8s-node1 ~]\# rm -rf /data/kubernetes/ssl/kubelet-client-*

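#The kubelet certificates must be deleted: when kubelet starts on the node it creates a temporary certificate file, and the real certificate files are generated once the master approves the request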

# 8.2. Deploy the kubelet component

# 8.2.1. Create the kubelet configuration file

[root@binary-k8s-node1 ~]\# vim /data/kubernetes/config/kubelet.conf

KUBELET_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--hostname-override=binary-k8s-node1 \

--network-plugin=cni \

--kubeconfig=/data/kubernetes/config/kubelet.kubeconfig \

--bootstrap-kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig \

--config=/data/kubernetes/config/kubelet-config.yml \

--cert-dir=/data/kubernetes/ssl \

--pod-infra-container-image=pause-amd64:3.0"

#Remember to set --hostname-override to the current node's name

# 8.2.2. Create the kubelet parameter file

[root@binary-k8s-node1 ~]\# vim /data/kubernetes/config/kubelet-config.yml

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS:

- 10.0.0.2

clusterDomain: cluster.local

failSwapOn: false

authentication:

  anonymous:

    enabled: false

  webhook:

    cacheTTL: 2m0s

    enabled: true

  x509:

    clientCAFile: /data/kubernetes/ssl/ca.pem

authorization:

  mode: Webhook

  webhook:

    cacheAuthorizedTTL: 5m0s

    cacheUnauthorizedTTL: 30s

evictionHard:

  imagefs.available: 15%

  memory.available: 100Mi

  nodefs.available: 10%

  nodefs.inodesFree: 5%

maxOpenFiles: 1000000

maxPods: 110

# 8.2.3. Create the bootstrap-kubeconfig file

1. Add the cluster's apiserver information to the kubeconfig file

[root@binary-k8s-node1 ~]\# kubectl config set-cluster kubernetes \

--certificate-authority=/data/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server="https://192.168.20.10:6443" \

--kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

2. Add the token to the kubeconfig file

[root@binary-k8s-node1 ~]\# kubectl config set-credentials "kubelet-bootstrap" \

--token=d7f96b0d86c574d0f64a713608db0922 \

--kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

#This token is the one in the previously generated /data/kubernetes/config/token.csv

3. Add the context, which ties the cluster entry to the user entry, to the kubeconfig file

[root@binary-k8s-node1 ~]\# kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

4. Make the default context the current context of the generated kubeconfig file

[root@binary-k8s-node1 ~]\# kubectl config use-context default --kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig

# 8.2.4. Create the systemd unit file and start the service

1. Write the systemd unit file

[root@binary-k8s-node1 ~]\# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/data/kubernetes/config/kubelet.conf

ExecStart=/data/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

2. Start the kubelet service

[root@binary-k8s-node1 ~]\# systemctl daemon-reload

[root@binary-k8s-node1 ~]\# systemctl start kubelet

[root@binary-k8s-node1 ~]\# systemctl enable kubelet

[root@binary-k8s-node1 ~]\# systemctl status kubelet

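# 8.2.5. Approve the node's request to join the cluster on the master node

After the kubelet service starts, it creates a temporary certificate file and sends a CSR to the master node. Once the master approves the request, the kubelet-client certificate files are generated, the kubelet ports open, and the node joins the cluster normally.

Entries in the CSR list are also cleared automatically after a while. If the request is not approved on the master in time, restart kubelet to send a new CSR.

1. Check the temporary certificate file on the node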

[root@binary-k8s-node1 ~]\# ll /data/kubernetes/ssl/*.tmp

-rw-------. 1 root root 227 9月 6 11:28 kubelet-client.key.tmp

#A temporary certificate file is created as soon as kubelet starts

2. List the CSRs on the master node

[root@binary-k8s-master1 ~]\# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-JmO7N8iDvyD0D-2Pu7_yHJ3ngZ5xXfA_TwRevqmHAXI 11s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

3. Approve the request

[root@binary-k8s-master1 ~]\# kubectl certificate approve node-csr-JmO7N8iDvyD0D-2Pu7_yHJ3ngZ5xXfA_TwRevqmHAXI

certificatesigningrequest.certificates.k8s.io/node-csr-JmO7N8iDvyD0D-2Pu7_yHJ3ngZ5xXfA_TwRevqmHAXI approved

4. The temporary file has now been deleted and the kubelet certificate files have been generated

[root@binary-k8s-node1 ~]\# ll /data/kubernetes/ssl/kubelet-client*

-rw-------. 1 root root 1236 9月 6 11:28 kubelet-client-2021-09-06-11-28-54.pem

lrwxrwxrwx. 1 root root 59 9月 6 11:28 kubelet-client-current.pem -> /data/kubernetes/ssl/kubelet-client-2021-09-06-11-28-54.pem

5. node1 has joined the cluster successfully

[root@binary-k8s-master1 ~]\# kubectl get node

NAME STATUS ROLES AGE VERSION

binary-k8s-master1 Ready <none> 2d22h v1.20.4

binary-k8s-node1 Ready <none> 4h59m v1.20.4

6. Check the kubelet ports on the node

[root@binary-k8s-node1 ~]\# netstat -lnpt | grep kubelet

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 29220/kubelet

tcp 0 0 127.0.0.1:44151 0.0.0.0:* LISTEN 29220/kubelet

tcp6 0 0 :::10250 :::* LISTEN 29220/kubelet

tcp6 0 0 :::10255 :::* LISTEN 29220/kubelet

# 8.3. Deploy the kube-proxy component

# 8.3.1. Create the kube-proxy configuration file

[root@binary-k8s-node1 ~]\# vim /data/kubernetes/config/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--config=/data/kubernetes/config/kube-proxy-config.yml"

# 8.3.2. Create the kube-proxy parameter file

[root@binary-k8s-node1 ~]\# vim /data/kubernetes/config/kube-proxy-config.yml

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0 #listen address

metricsBindAddress: 0.0.0.0:10249 #metrics listen address and port

clientConnection:

  kubeconfig: /data/kubernetes/config/kube-proxy.kubeconfig #kubeconfig file used to communicate with the apiserver

hostnameOverride: binary-k8s-node1 #name of the current node

clusterCIDR: 10.244.0.0/16

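# 8.3.3. Generate the kubeconfig file

Because the kube-proxy certificate files were already copied from the master node to the node in 8.1, the kubeconfig file can be generated directly.

A given component uses the same certificate files on every node of the cluster.

1. Generate the kubeconfig file

#Add the cluster's apiserver information to the kubeconfig file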

[root@binary-k8s-node1 ~]\# kubectl config set-cluster kubernetes \

--certificate-authority=/data/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server="https://192.168.20.10:6443" \

--kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

#Add the client certificate and key (the credentials) to the kubeconfig file

[root@binary-k8s-node1 ~]\# kubectl config set-credentials kube-proxy \

--client-certificate=/data/kubernetes/ssl/kube-proxy.pem \

--client-key=/data/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

#Add the context, which ties the cluster entry to the user entry, to the kubeconfig file

[root@binary-k8s-node1 ~]\# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

2. Make the default context the current context of the generated kubeconfig file

[root@binary-k8s-node1 ~]\# kubectl config use-context default --kubeconfig=/data/kubernetes/config/kube-proxy.kubeconfig

# 8.3.4. Create a systemd unit file to manage the service

[root@binary-k8s-node1 ~]\# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/data/kubernetes/config/kube-proxy.conf

ExecStart=/data/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# 8.3.5. Start the kube-proxy component

1. Start the service

[root@binary-k8s-node1 ~]\# systemctl daemon-reload

[root@binary-k8s-node1 ~]\# systemctl start kube-proxy

[root@binary-k8s-node1 ~]\# systemctl enable kube-proxy

2. Check the ports

[root@binary-k8s-node1 ~]\# netstat -lnpt | grep kube-proxy

tcp6 0 0 :::10249 :::* LISTEN 26954/kube-proxy

tcp6 0 0 :::10256 :::* LISTEN 26954/kube-proxy

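# 8.4. Quickly add a new node

A particular advantage of a binary deployment is that a new instance can be brought up quickly: copy an already deployed directory to the new location, adjust a few parameters, and start the services.

# 8.4.1. Copy the kubelet and kube-proxy directories to the new node

Copy both the kubelet and kube-proxy deployment directory and the systemd unit files.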

[root@binary-k8s-node1 ~]\# scp -rp /data/kubernetes root@binary-k8s-node2:/data

[root@binary-k8s-node1 ~]\# scp /usr/lib/systemd/system/kube* root@binary-k8s-node2:/usr/lib/systemd/system/

# 8.4.2. Configure and start the kubelet component

1. Delete the certificate files that are no longer needed

[root@binary-k8s-node2 ~]\# rm -rf /data/kubernetes/ssl/kubelet-client-*

2. Change the node name in the kubelet configuration file

[root@binary-k8s-node2 ~]\# vim /data/kubernetes/config/kubelet.conf

KUBELET_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/data/kubernetes/logs \

--hostname-override=binary-k8s-node2 \

--network-plugin=cni \

--kubeconfig=/data/kubernetes/config/kubelet.kubeconfig \

--bootstrap-kubeconfig=/data/kubernetes/config/bootstrap.kubeconfig \

--config=/data/kubernetes/config/kubelet-config.yml \

--cert-dir=/data/kubernetes/ssl \

--pod-infra-container-image=pause-amd64:3.0"

#Change the value of --hostname-override to the current node's name

3. Start kubelet

[root@binary-k8s-node2 ~]\# systemctl daemon-reload

[root@binary-k8s-node2 ~]\# systemctl start kubelet

[root@binary-k8s-node2 ~]\# systemctl enable kubelet

# 8.4.3. Approve the new node's request on the master node

1. List the CSRs on the master node

[root@binary-k8s-master1 ~]\# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-u_AHUS7T5rku-hnhnGsGi8uGBqlgMquOq_3oq6jrOyE 48s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

2. Approve the kubelet request of the new node

[root@binary-k8s-master1 ~]\# kubectl certificate approve node-csr-u_AHUS7T5rku-hnhnGsGi8uGBqlgMquOq_3oq6jrOyE

certificatesigningrequest.certificates.k8s.io/node-csr-u_AHUS7T5rku-hnhnGsGi8uGBqlgMquOq_3oq6jrOyE approved

3. The node has joined the cluster

[root@binary-k8s-master1 ~]\# kubectl get node

NAME STATUS ROLES AGE VERSION

binary-k8s-master1 Ready <none> 2d23h v1.20.4

binary-k8s-node1 Ready <none> 5h54m v1.20.4

binary-k8s-node2 Ready <none> 1s v1.20.4

4. Check the kubelet ports

[root@binary-k8s-node2 ~]\# netstat -lnpt | grep kube

tcp 0 0 127.0.0.1:41121 0.0.0.0:* LISTEN 16694/kubelet

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 16694/kubelet

tcp6 0 0 :::10250 :::* LISTEN 16694/kubelet

tcp6 0 0 :::10255 :::* LISTEN 16694/kubelet

# 8.4.4. Configure and start the kube-proxy component

1. Change the hostname in the kube-proxy parameter file

[root@binary-k8s-node2 ~]\# vim /data/kubernetes/config/kube-proxy-config.yml

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

metricsBindAddress: 0.0.0.0:10249

clientConnection:

  kubeconfig: /data/kubernetes/config/kube-proxy.kubeconfig

hostnameOverride: binary-k8s-node2

clusterCIDR: 10.244.0.0/16

2. Start kube-proxy

[root@binary-k8s-node2 ~]\# systemctl daemon-reload

[root@binary-k8s-node2 ~]\# systemctl start kube-proxy

[root@binary-k8s-node2 ~]\# systemctl enable kube-proxy

3. Check the kube-proxy ports

[root@binary-k8s-node2 ~]\# netstat -lnpt | grep kube

tcp 0 0 127.0.0.1:41121 0.0.0.0:* LISTEN 16694/kubelet

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 16694/kubelet

tcp6 0 0 :::10249 :::* LISTEN 20410/kube-proxy

tcp6 0 0 :::10250 :::* LISTEN 16694/kubelet

tcp6 0 0 :::10255 :::* LISTEN 16694/kubelet

tcp6 0 0 :::10256 :::* LISTEN 20410/kube-proxy
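The two manual edits in 8.4.2 and 8.4.4 change the same value, the node name, in two files, so they are easy to script when more nodes are cloned this way. A minimal sketch, assuming the file names and layout used in this guide; set_node_name is a hypothetical helper, and it only rewrites the name, so the copied kubelet-client-* certificates still have to be deleted as in 8.4.2:

```shell
#!/usr/bin/env bash
# set_node_name NAME [CONFIG_DIR]
# Hypothetical helper: write NAME into kubelet.conf (--hostname-override)
# and kube-proxy-config.yml (hostnameOverride) under CONFIG_DIR.
set_node_name() {
  local name="$1" conf_dir="${2:-/data/kubernetes/config}" f tmp
  for f in kubelet.conf kube-proxy-config.yml; do
    [ -f "${conf_dir}/${f}" ] || { echo "missing ${conf_dir}/${f}" >&2; return 1; }
  done
  tmp="$(mktemp)" || return 1

  # Replace only the value after --hostname-override=, keeping the trailing " \".
  sed "s/--hostname-override=[^ ]*/--hostname-override=${name}/" \
    "${conf_dir}/kubelet.conf" > "${tmp}" && cat "${tmp}" > "${conf_dir}/kubelet.conf"

  # Replace only the value after hostnameOverride:, keeping any trailing comment.
  sed "s/^\(hostnameOverride:[[:space:]]*\)[^[:space:]]*/\1${name}/" \
    "${conf_dir}/kube-proxy-config.yml" > "${tmp}" && cat "${tmp}" > "${conf_dir}/kube-proxy-config.yml"

  rm -f "${tmp}"
}

# Usage on the new node, before starting kubelet and kube-proxy:
#   set_node_name binary-k8s-node2
```

Writing through a temporary file and then back with cat keeps the original file's permissions and avoids relying on a particular sed -i dialect.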

# 9. Deploy the coredns component for the cluster

# 9.1. Deploy the coredns component

1. Contents of the coredns.yaml file

[root@binary-k8s-master1 ~]\# cat coredns.yaml

# Warning: This is a file generated from the base underscore template file: coredns.yaml.base

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: Reconcile

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations: